
November 14, 2017

Topics: Cloud Volumes ONTAP, DevOps, Data Cloning, AWS

8 minute read

The world is changing. Digitalization is creeping into every aspect of our lives. Computers are everywhere. Just look at all the things our blog has to say about AWS EBS.

You might not see them, but micro-controllers have become so incredibly cheap and powerful that we can afford to put them into nearly everything. Today, a light switch (almost) has more processing power than my first computer (I am a C64 child).

This also means we have an incredible amount of software running around us, software that more and more people are enhancing at an ever-increasing pace. A foundation of this agility is automated testing. Only by writing tests as software and automating the heck out of them have modern code bases been able to progress with the speed and reliability we have seen over the past 10 years.

Why is Cloning Much More Relevant Today?

Today, an important metric for many projects is how many tests you can run against a code base per hour. The more tests you can run, the greater your agility and the faster the code base can progress.

Most applications have state (such as databases or other persistent data), and many tests run application code that alters that state. A test succeeds if the new state is the one you expected and fails if you get unexpected results.

Because of this, each test needs to start from a well-defined state. This means that your testbed has to reset your data files and databases to the well-defined state. You should get a “golden copy” to use as a template.

To create your test copy, you must copy the golden copy. This process of copying takes time. Depending on the size of your data set, the copy part can take up the majority of the whole test runtime. This limits the number of tests per hour.

Copying also consumes more space. Every full copy needs as much capacity as the golden copy, so you have to provision a multiple of the capacity your dataset requires. More capacity means more money spent.

A good cloning technology will eliminate these problems and massively boost your test agility. With the right technology, cloning only involves some pointer magic and a bit of metadata massage.

The process becomes nearly instantaneous and only consumes a very small amount of storage for additional metadata. The dataset itself is untouched and only data that has changed (in the clone or the parent) consumes additional capacity. Clone a big database multiple times and you will easily save dozens of terabytes worth of capacity while also being considerably faster.
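The "pointer magic" can be illustrated with a toy model. The sketch below is not how any particular storage system implements cloning; the `Volume` class and `unique_blocks` helper are hypothetical names made up for this illustration. It only shows the general copy-on-write idea: a clone copies the block map (metadata), and a block consumes new capacity only once it is overwritten.

```python
class Volume:
    """Toy copy-on-write volume: a block map pointing at shared, immutable blocks."""

    def __init__(self, block_map=None):
        # block number -> bytes object; bytes are immutable, so sharing them is safe
        self.block_map = dict(block_map or {})

    def write(self, block_no, data):
        # A write repoints only this volume's entry; other volumes keep the old block
        self.block_map[block_no] = bytes(data)

    def read(self, block_no):
        return self.block_map[block_no]

    def clone(self):
        # Copies only the pointers (metadata), never the data blocks themselves
        return Volume(self.block_map)


def unique_blocks(*volumes):
    """Count physically distinct blocks referenced by the given volumes."""
    return len({id(block) for vol in volumes for block in vol.block_map.values()})


# Build a small "golden copy" of eight 4k blocks, then clone it.
golden = Volume()
for i in range(8):
    golden.write(i, bytes([i]) * 4096)

clone = golden.clone()
blocks_after_clone = unique_blocks(golden, clone)   # still 8: nothing was copied

clone.write(0, b"\xff" * 4096)                      # the clone diverges in one block
blocks_after_write = unique_blocks(golden, clone)   # 9: only the changed block is new
```

In this model the golden copy is never modified by a test that writes to the clone, which is what makes resetting to a well-defined state cheap.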

After witnessing such cloning technology in action, you will surely wonder how you have put up with old data management technology for so long.

Since we are the cloud guys, we will explore how to uncover the hidden cloning treasure in a perfect place to run test/dev workloads: AWS.

Cloning Amazon EBS Volumes in Amazon AWS

How it works:

Ironically, there isn’t any official functionality in AWS to clone Amazon EBS volumes. The platform simply doesn’t offer it.

You must either:

- Copy the volume manually: this might take a long time, depending on the amount of data.

- Search Google for “clone EBS volumes”: you will find some recipes on the usual sites such as Stack Overflow, or on the AWS forums.

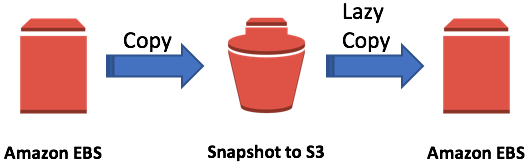

You will find recommendations on how to create a snapshot of the EBS volume on S3. This snapshot can then be used to create a new volume.

Voila, you've just created a clone!

Note:

While taking a snapshot of the EBS volume feels instantaneous (the operation returns quickly), it involves copying the volume's data to S3 in the background. This can take anywhere from minutes to hours to finish, depending on the amount of data. Only then can you create a new volume from the snapshot. That second step is very quick and yields a new EBS volume containing the cloned data.
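The snapshot-then-create sequence could be scripted roughly as follows. This is a minimal sketch, assuming `ec2` behaves like a boto3 EC2 client; the function name `clone_via_snapshot` and the waiter settings are choices made for this illustration, not part of the original test setup.

```python
def clone_via_snapshot(ec2, volume_id, availability_zone, max_wait_attempts=240):
    """Clone an EBS volume by snapshotting it and creating a new volume from the snapshot.

    `ec2` is assumed to behave like a boto3 EC2 client.
    Returns the ID of the new volume.
    """
    # create_snapshot returns right away; the copy continues in the background
    snapshot = ec2.create_snapshot(
        VolumeId=volume_id,
        Description=f"clone source for {volume_id}",
    )
    snapshot_id = snapshot["SnapshotId"]

    # Block until the snapshot is complete. A generous attempt limit is an
    # assumption here, since large volumes can take a long time to finish.
    ec2.get_waiter("snapshot_completed").wait(
        SnapshotIds=[snapshot_id],
        WaiterConfig={"Delay": 15, "MaxAttempts": max_wait_attempts},
    )

    # Only now can the new volume be created from the snapshot
    volume = ec2.create_volume(
        SnapshotId=snapshot_id,
        AvailabilityZone=availability_zone,
        VolumeType="gp2",
    )
    new_volume_id = volume["VolumeId"]
    ec2.get_waiter("volume_available").wait(VolumeIds=[new_volume_id])
    return new_volume_id
```

With boto3 installed and credentials configured, it would be called along the lines of `clone_via_snapshot(boto3.client("ec2"), source_volume_id, zone)`, where `source_volume_id` and `zone` are placeholders for your own volume ID and availability zone.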

A Behind-the-Scenes Look

To enable this quick provisioning, Amazon uses an approach called lazy copy. The volume is initialized with some fundamental metadata, but the data itself isn’t copied to the volume before the operation returns. Instead, each block is copied from the snapshot to the volume the first time it is accessed.
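The effect of lazy copy on read latency can be modeled in a few lines. This is a simulation only, not AWS's implementation: the `LazyVolume` class is hypothetical, and the two latency constants are illustrative placeholders for "block already on the volume" versus "block still has to come from the snapshot".

```python
class LazyVolume:
    """Toy model of a volume whose blocks are fetched from a snapshot on first access."""

    def __init__(self, snapshot_blocks, local_ms=1.0, remote_ms=10.0):
        self._snapshot = snapshot_blocks   # stands in for the snapshot data held in S3
        self._local = {}                   # blocks that have already been copied over
        self.local_ms = local_ms           # illustrative latency for a local block
        self.remote_ms = remote_ms         # illustrative latency for a first access

    def read(self, block_no):
        """Return (data, simulated latency in milliseconds)."""
        if block_no in self._local:
            return self._local[block_no], self.local_ms
        # First touch: the block has to be pulled from the snapshot
        data = self._snapshot[block_no]
        self._local[block_no] = data
        return data, self.remote_ms

    def hydrated_fraction(self):
        """Share of blocks that no longer need a trip to the snapshot."""
        return len(self._local) / len(self._snapshot)


snapshot = {n: bytes([n]) * 4096 for n in range(4)}
volume = LazyVolume(snapshot)

_, first_read_ms = volume.read(2)    # pays the remote latency
_, second_read_ms = volume.read(2)   # now served locally
```

A random workload keeps touching blocks that have not been fetched yet, which is why a freshly created volume stays slow until most of its blocks have been read at least once.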

Of course, this incurs S3-like latencies (tens of milliseconds) instead of the low latencies of normal EBS GP2 volumes (around 1ms). Such high latencies render many applications (e.g., a database) unusable. This defeats the whole purpose of cloning. You may be better off copying the data.

Interesting Statistics

To put some real numbers behind this, I ran a test. The goal of the test was to see how latencies change between an EBS volume and an EBS volume created from an S3 snapshot.

To run the test, I applied a steady workload well within the normal operating parameters of my environment and stayed away from potential bottlenecks. I chose a database-like workload (a mix of sequential and random reads and writes with 8/16/64/256k block sizes) generated with vdbench.

I used an m4.xlarge EC2 instance (16GB RAM, latest Amazon AMI) and a 100GB EBS GP2 volume (= 300 guaranteed IOPS). The 100GB volume contained a 50GB database file, which is about 3 times the size of the instance's main memory (the working set was deliberately larger than memory to avoid heavy cache effects).

To avoid random results owing to GP2 IO bursting, I applied only 250 IOPS so that the workload always stayed within the guaranteed IO envelope of 300 IOPS. Each test ran for 300s after the 20s warmup time. When running this workload against the plain GP2 volume, the reported latency was 0.8 ms, which was quite good.
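The 300 IOPS figure follows from the GP2 baseline rule of 3 IOPS per GB with a floor of 100 IOPS. The helper below is a small sketch of that arithmetic; the function name is made up for this example, and the upper cap is an assumption passed in as a parameter because AWS has changed it over time.

```python
def gp2_baseline_iops(size_gb, per_gb=3, floor=100, cap=16000):
    """Baseline (non-burst) IOPS of a GP2 volume of the given size.

    The `cap` default is an assumption; check the current AWS documentation.
    """
    return max(floor, min(size_gb * per_gb, cap))


baseline = gp2_baseline_iops(100)        # the 100GB volume used in the test
applied_iops = 250                       # workload applied in the test
headroom = baseline - applied_iops       # margin below the guaranteed envelope
ios_per_run = applied_iops * 300         # I/Os issued in one 300s measurement run

print(baseline, headroom, ios_per_run)   # prints: 300 50 75000
```

Staying below the baseline means the measured latency does not depend on how many burst credits the volume happens to have left.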

I then snapshotted the volume (create-snapshot). While the operation returned quickly, it continued in the background and it took 10m30s for the snapshot to complete. Only then was I able to create a new volume out of the snapshot (create-volume with snapshot-id), which took only 10s.

With this volume (same size, same data), I repeated the same test. Now, the latency was 628ms. We are talking about latencies well beyond half a second.

Latencies are expected to improve over time as more and more blocks are read back from S3 to the volume. And indeed, when I ran the test for an hour instead of 5 minutes, the latencies went down into the 400ms region (still far from usable).

But again, this was for a very small data set of 50GB. Using this approach for bigger datasets in the 1TB+ region seems completely out of the question.

To be fair, AWS isn’t positioning S3 snapshots as a means to create clones. In fact, the recommended way to “initialize” EBS volumes created from snapshots is to read all of the data once. I gave their recommendations a try. But even the fio approach took a very long time.
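"Reading all the data once" simply means touching every block of the device so that it gets pulled from the snapshot. The sketch below shows that idea in Python; it is not the tool used in the test above, and the function name `read_all_blocks` is hypothetical. It reads sequentially with a single thread, so it is likely to be slower than a parallel reader.

```python
import time


def read_all_blocks(path, block_size=1024 * 1024):
    """Read every block of `path` once and discard the data.

    Returns (bytes_read, elapsed_seconds).
    """
    total = 0
    start = time.monotonic()
    # buffering=0 avoids an extra Python-level buffer; the OS may still cache reads
    with open(path, "rb", buffering=0) as device:
        while True:
            chunk = device.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    return total, time.monotonic() - start
```

On a Linux instance this would be pointed at the attached block device (the device name depends on your instance) and run with sufficient privileges. The elapsed time it returns is the cost you pay before the volume delivers its normal latency.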

Summarising the Approach

Creating the clone took more than 10 minutes. The average IO latency on the clone was over 600 times higher than on the parent volume. I think it is fair to say that, in this case, you are better off copying the data.
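For reference, the summary figures can be recomputed from the measurements reported above. The variable names below are made up for this short calculation; the numbers are the ones from the test.

```python
baseline_latency_ms = 0.8          # plain GP2 volume
clone_latency_ms = 628.0           # volume created from the snapshot
snapshot_seconds = 10 * 60 + 30    # snapshot completion time (10m30s)
create_volume_seconds = 10         # create-volume from the snapshot

latency_ratio = clone_latency_ms / baseline_latency_ms
clone_seconds = snapshot_seconds + create_volume_seconds

print(f"latency ratio: about {latency_ratio:.0f}x")       # prints: latency ratio: about 785x
print(f"time to create the clone: {clone_seconds} s")     # prints: time to create the clone: 640 s
```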

Cloud Volumes ONTAP to the Rescue

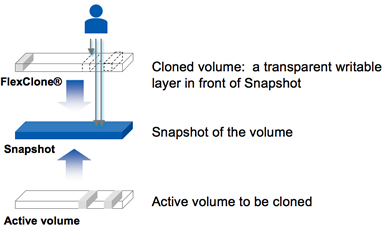

Fortunately, Cloud Volumes ONTAP offers an effective solution to the cloning problem. Cloud Volumes ONTAP is a software-defined storage solution that can be deployed on AWS or Microsoft Azure. ONTAP itself is the industry-leading storage operating system and offers a smart storage virtualization layer that can do instant, performant, and storage-efficient snapshotting and cloning of data sets, independent of the data set size.

Simply provision Cloud Volumes ONTAP, create a volume and mount it via NFS to your Linux VM, or create a LUN and connect it via iSCSI to your Windows/Linux host. Then, put your application's data on it. Cloud Volumes ONTAP will allow you to create instant clones using FlexClone®. Mount the clone and voila, it simply works! And the speed is the same as with the parent volume.

The cool part is that neither Cloud Volumes ONTAP snapshots nor clones use up any capacity (apart from some metadata), until you start modifying the parent or the clone and the data diverges. Even then it will only store changed blocks (4k), again without any performance penalty. There is no better way to clone Amazon EBS volumes.

You not only get instant, performance-neutral clones, but you also save money by not paying for extra Amazon S3 capacity for the snapshot or for an additional, full-size AWS EBS volume for the clone. And the good thing: the more snapshots and clones you create, the bigger the savings will be. NetApp FlexClone volumes will save you time and money. It’s proven. We're scaling data management to change the world with data.