November 11, 2018

6 minute read

Analytics is becoming a major driver for organizations to move to the public cloud, thanks to its scalability. For example, when I recently spoke with a group of large retailers, better understanding and targeting their customers with improved offerings had become a key digital transformation initiative for increasing revenue and profitability. To do so, most were looking to consolidate extensive customer data locked in on-premises data warehouses into even larger data lakes in AWS, Azure, or Google Cloud that could be analyzed at scale.

The cloud appears ideal for big data, particularly with low cost options for cloud storage. However, users need to be aware of cost and performance tradeoffs, as well as hidden costs that may lead to suboptimal outcomes. This article looks at how NetApp Cloud Volumes Service can give you the best of both cost and performance.

Cloud Storage Has Cost and Performance Tradeoffs

Object storage, such as Amazon S3, is massively scalable and durable, which makes it well suited to maintaining a large data lake. Compared to higher-performance storage such as Amazon EBS block storage, Amazon S3 can be extremely cost-effective for storing large data sets. Because data in object storage is much slower to access, it is often used as a cold-storage tier, with the working set moved into higher-performing primary storage so jobs complete more quickly. But Amazon S3 can also serve as primary storage: many cloud analytics applications are designed to consume their working set directly from object storage. Many users are willing to wait longer for results in exchange for storage costs of roughly $0.01-0.02 per GB per month.

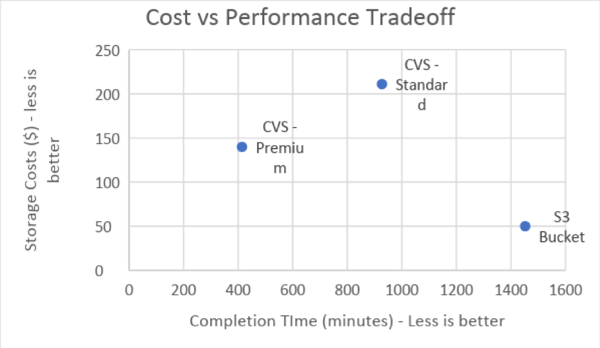

My colleague Chad Morgenstern recently tested a large analytics job in AWS. The Hadoop Spark cluster processed 74TB of data across 420 concurrent container instances. The job took about 24 hours to complete, and the cost to store that working set in the Amazon S3 Standard storage class was a modest $50 for the duration.
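
The $50 figure comes from prorating a monthly per-GB price over the job's runtime. The sketch below is a back-of-the-envelope check, not AWS's exact billing method. It assumes decimal units (1 TB = 1,000 GB), a month of about 730 hours, and the $0.02/GB-month upper end of the range quoted above. Actual S3 Standard pricing varies by region and volume tier.

```python
HOURS_PER_MONTH = 730  # assumption: approximate length of an average month in hours


def prorated_storage_cost(capacity_gb, price_per_gb_month, hours):
    """Cost of holding capacity_gb for `hours` at a monthly per-GB price."""
    return capacity_gb * price_per_gb_month * hours / HOURS_PER_MONTH


working_set_gb = 74 * 1000  # 74 TB working set, decimal units (assumption)
s3_cost = prorated_storage_cost(working_set_gb, 0.02, 24)  # $0.02/GB-month for 24 h
print(f"S3 capacity cost for 24 h: ${s3_cost:.2f}")  # prints $48.66
```

That works out to about $49, in line with the roughly $50 reported for the run.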

Next, we tried running the same job using NetApp Cloud Volumes Service, a durable, performant file-based data service available for AWS. It offers three service levels, Standard, Premium, and Extreme, whose performance increases along with their storage price. We tested the Standard and Premium levels. For this data set, the Standard level provides up to 1400 MB/sec of throughput, while the Premium level provides over 3000 MB/sec at twice the cost: $0.10/GB/month for Standard vs. $0.20/GB/month for Premium.
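
To put these throughput figures in perspective, the sketch below estimates how long it would take just to stream the 74 TB data set once at each service level's quoted throughput. It is a rough lower bound under stated assumptions: decimal units, throughput sustained for the whole run, and each byte read exactly once. A real Spark job may read some data more than once or be limited by compute instead.

```python
def min_read_hours(data_tb, throughput_mb_s):
    """Lower bound on the hours needed to read data_tb once at a sustained throughput."""
    data_mb = data_tb * 1_000_000  # assumption: decimal units, 1 TB = 1,000,000 MB
    return data_mb / throughput_mb_s / 3600  # seconds -> hours


for level, mb_s in [("Standard", 1400), ("Premium", 3000)]:
    print(f"{level}: at least {min_read_hours(74, mb_s):.1f} h to read 74 TB once")
# Standard: at least 14.7 h to read 74 TB once
# Premium: at least 6.9 h to read 74 TB once
```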

Both service levels performed much better than object storage, completing the Hadoop Spark job 1.5 to 3.5X faster than with Amazon S3. The storage cost for the working set was $139 for Premium and $211 for Standard. Premium actually ends up cheaper for the duration of the job, because it finishes more quickly. On storage alone, Cloud Volumes Service gives you better performance at roughly 3-4X the cost of Amazon S3. So, again, it appears to be a typical cost vs. performance tradeoff.
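
Why can the pricier tier end up cheaper? The storage cost for one run is roughly price × capacity × duration. If Premium costs 2X per GB but finishes more than 2X faster than Standard, its storage bill for the job is lower. The minimal sketch below makes that comparison per GB. It assumes both tiers hold the same provisioned capacity and that the 1.5X speedup belongs to Standard and the 3.5X speedup to Premium. The capacities behind the actual $139 and $211 figures are not stated in this post, so the ratio is illustrative only.

```python
BASELINE_HOURS = 24.0  # Amazon S3 runtime for the job

tiers = {
    # name: (price in USD per GB-month, assumed speedup over Amazon S3)
    "Standard": (0.10, 1.5),
    "Premium": (0.20, 3.5),
}


def relative_storage_cost(price, speedup):
    """Per-GB storage cost of one run, up to a constant factor (price x runtime)."""
    return price * BASELINE_HOURS / speedup


std = relative_storage_cost(*tiers["Standard"])
prem = relative_storage_cost(*tiers["Premium"])
print(f"Premium / Standard storage cost ratio: {prem / std:.2f}")  # prints 0.86
```

Premium comes out cheaper because its speedup over Standard (3.5 / 1.5 ≈ 2.33) exceeds its 2X price premium.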

Additional Costs of Object Storage

But many who are enticed by low monthly object storage prices don’t fully realize the additional costs incurred when their data is actually being used, or how much those costs can add to the total. In particular, AWS charges for the API requests used to access data in Amazon S3, and those requests add up quickly when S3 holds your working set.

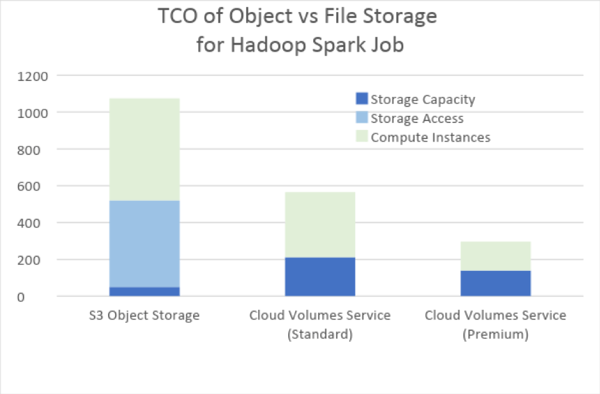

The API cost of $0.0004 per 1,000 GET requests seems minuscule. However, this job was making over 13,000 GET requests PER SECOND, or over 1.1 BILLION requests over its 24-hour duration. That translates to $470 in API access costs, nearly 10X the cost of the Amazon S3 storage capacity itself.
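
The request arithmetic can be checked with a few lines. This sketch assumes a perfectly steady request rate over the 24-hour run and uses the $0.0004 per 1,000 GET price quoted above.

```python
GET_PRICE_PER_1000 = 0.0004  # USD per 1,000 GET requests, as quoted above


def get_request_cost(requests_per_second, hours):
    """Return (total GET requests, cost in USD), assuming a steady request rate."""
    total_requests = requests_per_second * hours * 3600
    return total_requests, total_requests / 1000 * GET_PRICE_PER_1000


requests, cost = get_request_cost(13_000, 24)
print(f"{requests / 1e9:.2f} billion GETs -> ${cost:.0f}")  # 1.12 billion GETs -> $449
```

At exactly 13,000 requests per second this gives about $449. The $470 reported for the job corresponds to roughly 1.18 billion requests, or about 13,600 per second, which is consistent with the job making "over" 13,000 requests per second.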

In other words, when object storage serves as primary working set storage, the dominant cost may well be access, not capacity. Once access charges are included, Amazon S3 actually costs $520 for this job ($50 for capacity plus $470 for requests), not $50. So not only is object storage comparatively slow, but its overall TCO can also be quite high.

Cloud Volumes Service includes high-performance access in its storage price, so the one price you see is what you pay. Not only is it much faster than object storage, but the total storage cost for this job also ends up considerably lower: about 60-75% less than with Amazon S3.
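
The 60-75% range follows directly from the storage figures above. A minimal check, using only the dollar amounts reported in this post:

```python
s3_total = 50 + 470  # Amazon S3 capacity + GET request charges, in USD
cvs_storage = {"Premium": 139, "Standard": 211}  # access is included in the price

for tier, cost in cvs_storage.items():
    savings = 1 - cost / s3_total
    print(f"{tier}: ${cost} vs ${s3_total} on S3 -> {savings:.0%} less")
# Premium: $139 vs $520 on S3 -> 73% less
# Standard: $211 vs $520 on S3 -> 59% less
```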

Compute: The REAL Hidden Cost of Slow Storage

Now, more than one customer has already told me, “Forget about saving a few bucks on storage costs; what really kills me is compute costs.” Once compute is factored in, high costs can make it prohibitive to move some workloads to the cloud. Therein lies an even more insidious hidden cost of slower storage: you need to consider the TCO of your entire application workload, particularly how slow storage drives up your compute costs.

The great thing about this new era of transient containers is that you only need to keep your compute running for the duration of the job. For this analytics job, we used CentOS, which qualified for per-second billing, and we needed a cluster of 15 c5.9xlarge EC2 instances to run the Hadoop Spark container instances.

Because the job took much longer to finish on Amazon S3, the EC2 compute costs came to $555. Cloud Volumes Service fed the EC2 instances faster and completed the job more quickly, which cut compute costs to only $158 (Premium) or $354 (Standard).
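
With per-second billing, compute cost scales linearly with runtime, so the compute figures also reveal how long each run took. The sketch below works backwards from the S3 run, where $555 over 24 hours implies about $23.13 per hour for the whole cluster. It assumes the same 15-instance cluster and hourly rate for every run.

```python
s3_compute_cost, s3_hours = 555.0, 24.0
fleet_rate = s3_compute_cost / s3_hours  # USD per hour for the whole cluster (~23.13)

for tier, compute_cost in [("Standard", 354.0), ("Premium", 158.0)]:
    hours = compute_cost / fleet_rate  # runtime implied by the compute bill
    speedup = s3_hours / hours
    print(f"{tier}: ~{hours:.1f} h, ~{speedup:.1f}x faster than S3")
# Standard: ~15.3 h, ~1.6x faster than S3
# Premium: ~6.8 h, ~3.5x faster than S3
```

The implied speedups of roughly 1.6X and 3.5X line up with the 1.5 to 3.5X range reported earlier.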

So Cloud Volumes Service also reduces the compute portion of your TCO. The lowest-cost option for this job turns out to be the fastest one: Cloud Volumes Service at the Premium tier. Although the Premium tier charges twice as much per GB as the Standard tier, it completes the job so much more quickly that its total cost ($139 storage + $158 compute = $297) is roughly half that of the Standard tier ($565) and a whopping 70% lower than with Amazon S3 ($1,075)!
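
Adding the storage and compute figures reported in this post gives the full per-job TCO for each option. This is a minimal sketch of that sum. The dollar amounts come from the test run described above, and everything else is simple arithmetic.

```python
costs = {
    # option: (storage USD, compute USD), as reported for this job
    "Amazon S3": (520, 555),
    "CVS Standard": (211, 354),
    "CVS Premium": (139, 158),
}

totals = {option: storage + compute for option, (storage, compute) in costs.items()}
baseline = totals["Amazon S3"]
for option, total in totals.items():
    print(f"{option}: ${total} total, {1 - total / baseline:.0%} less than S3")
# Amazon S3: $1075 total, 0% less than S3
# CVS Standard: $565 total, 47% less than S3
# CVS Premium: $297 total, 72% less than S3
```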

Summary – Having Your Cake and Eating It Too with Cloud Volumes Service

Inexpensive object storage costs can make the cloud look very attractive for large analytics data sets, but you need to really examine all the costs that factor into TCO of your analytics workload. In particular, additional access costs for cheap storage, plus the additional compute costs of slow storage, can really overwhelm the apparent benefits of cheap storage.

NetApp Cloud Volumes Service provides a high-performance data option that not only delivers quicker results but also saves you money, letting you move analytics workloads to the cloud that might otherwise be cost-prohibitive. You no longer have to compromise between cost and performance: you really can have your cake and eat it too!

For a more detailed analysis and comparison of this Hadoop Spark test case, see the accompanying technical whitepaper.