September 8, 2021

We are pleased to be selected as a launch partner for EKS Anywhere. With EKS Anywhere, you can create and manage K8s clusters on-premises to run your containerized workloads. However, persistent storage and data management are key requirements and remain a challenge for stateful Kubernetes workloads. We developed NetApp® Astra™ Trident, an open-source Container Storage Interface (CSI) compliant dynamic storage orchestrator to address some of these challenges. It enables you to consume storage from NetApp's trusted and proven storage platforms. With Astra, NetApp brings considerable core competencies and expertise in data management to our customers, enabling their business-critical cloud-native workloads across a hybrid cloud.

Astra Trident has been instrumental in simplifying dynamic provisioning of volumes and other storage management services for stateful Kubernetes applications using NetApp storage. Astra Trident has an established track record and is trusted by industry-leading enterprise customers across multiple verticals. Now, we provide NetApp Astra Trident's storage management functionality for EKS-Anywhere.

This blog describes a basic NetApp Astra Trident installation, setup, and operation on an EKS-Anywhere cluster with Network File System (NFS) using Astra Trident's ontap-nas driver. You can customize the install and configure many more features and operations than are described in this blog. Astra Trident's documentation details many options for your installation and setup, as well as information about more advanced features.

Is there any special preparation needed?

Not a lot. Just deploy your EKS-Anywhere cluster as per the documentation and ensure kubectl access. However, be sure to include an SSH key in your vSphereCluster Custom Resource (CR) manifest (sshAuthorizedKey) to allow SSH access to your nodes. This is required to install, unmask (if necessary), start, and enable the NFS and/or iSCSI drivers on your worker nodes. Be sure all your worker nodes have the appropriate drivers running before installing any applications. More information is available in the "Preparing your worker nodes" section of the Astra Trident documentation.

Here is an example of the commands to install and unmask the Ubuntu nfs-common driver on an EKS-A worker node.

$ sudo apt-get update

$ sudo apt-get install -y nfs-common

$ sudo systemctl is-enabled nfs-common

masked

$ sudo rm /lib/systemd/system/nfs-common.service

$ sudo systemctl daemon-reload

$ sudo systemctl start nfs-common

$ sudo systemctl status nfs-common

● nfs-common.service - LSB: NFS support files common to client and server

Loaded: loaded (/etc/init.d/nfs-common; generated)

Active: active (running) since Sun 2021-08-22 18:47:29 UTC; 7s ago

Docs: man:systemd-sysv-generator(8)

$ sudo systemctl enable nfs-common

How do I install Astra Trident on my EKS-Anywhere Cluster?

Ensure your EKS-Anywhere cluster is up and running, that you have kubectl access, and that the cluster can reach your NetApp storage system.

tme@eksadmin:~$ kubectl get nodes

NAME                                        STATUS   ROLES                  AGE   VERSION
astra-eks-a-cluster-2lfwz                   Ready    control-plane,master   4d    v1.20.7-eks-1-20-2
astra-eks-a-cluster-5rrx8                   Ready    control-plane,master   4d    v1.20.7-eks-1-20-2
astra-eks-a-cluster-md-0-566fc589b4-556dp   Ready    <none>                 4d    v1.20.7-eks-1-20-2
astra-eks-a-cluster-md-0-566fc589b4-rp2q8   Ready    <none>                 4d    v1.20.7-eks-1-20-2
astra-eks-a-cluster-md-0-566fc589b4-x5gs9   Ready    <none>                 4d    v1.20.7-eks-1-20-2
astra-eks-a-cluster-wc6xg                   Ready    control-plane,master   4d    v1.20.7-eks-1-20-2

From a workstation that has kubectl access to your EKS-A workload cluster, download Astra Trident, install the orchestrator Custom Resource Definition (CRD), create the trident namespace, and deploy the operator.

tme@eksadmin:~$ wget https://github.com/NetApp/trident/releases/download/v21.04.1/trident-installer-21.04.1.tar.gz

tme@eksadmin:~$ tar -xvf trident-installer-21.04.1.tar.gz

tme@eksadmin:~$ cd trident-installer

tme@eksadmin:~/trident-installer$ kubectl create -f deploy/crds/trident.netapp.io_tridentorchestrators_crd_post1.16.yaml

tme@eksadmin:~/trident-installer$ kubectl create ns trident

tme@eksadmin:~/trident-installer$ kubectl create -f deploy/bundle.yaml

Install the Trident pods via a CR.

tme@eksadmin:~/trident-installer$ kubectl create -f deploy/crds/tridentorchestrator_cr.yaml

tridentorchestrator.trident.netapp.io/trident created

Now, you can see the Astra Trident pods are installed and running!

tme@eksadmin:~/trident-installer$ kubectl get pods -n trident

NAME READY STATUS RESTARTS AGE

trident-csi-85f67d7869-jj8wx 6/6 Running 0 3m46s

trident-csi-9tch6 2/2 Running 1 3m46s

trident-csi-cfmwb 2/2 Running 0 3m46s

trident-csi-dng9k 2/2 Running 0 3m46s

trident-csi-gg7zh 2/2 Running 0 3m46s

trident-csi-mf4cv 2/2 Running 1 3m46s

trident-csi-z6qc4 2/2 Running 0 3m46s

trident-operator-6c85cc75fd-2wwwd 1/1 Running 0 6m28s

More information on installing Trident can be found in Trident's documentation. You can also install via a Helm Chart if you are running Astra Trident 21.07.1 or higher.

OK, that was easy, how do I set up Astra Trident for dynamic volume provisioning, mounting, on-demand expansion, and snapshots?

A few more steps are required to get Astra Trident fully set up to start servicing your PersistentVolumeClaims (PVC) and all your persistence needs. This section of the blog goes through the following steps:

- Create at least one NetApp backend for Trident to create volumes

- Create at least one Kubernetes storage class with Trident as the provisioner

- Install the Kubernetes CSI snapshot controller

- Create at least one Kubernetes volumesnapshot class

Create at least one backend where Astra Trident will create NetApp volumes. Astra Trident's documentation gives more detail on how to create backends, and some sample files are also included in the trident-installer/sample-input directory downloaded with your trident bundle. A basic backend file with the ontap-nas driver is shown below.

backend-ontap-nas.json:

{
    "version": 1,
    "storageDriverName": "ontap-nas",
    "backendName": "<name>",
    "managementLIF": "<IP_of_mgmt_LIF>",
    "dataLIF": "<IP_of_data_LIF>",
    "svm": "<SVM_name>",
    "username": "<name>",
    "password": "<password>"
}

Replace <name>, <IP_of_mgmt_LIF>, <IP_of_data_LIF>, <SVM_name>, <name>, and <password> with appropriate values.

Create the backend using tridentctl and verify that its state is online, which confirms that your cluster can reach it.

tme@eksadmin:~/trident-installer$ ./tridentctl create backend -f backend-ontap-nas.json -n trident

+------+----------------+--------------------------------------+--------+---------+

| NAME | STORAGE DRIVER | UUID | STATE | VOLUMES |

+------+----------------+--------------------------------------+--------+---------+

| OTS | ontap-nas | f13aa46b-e2e1-4062-8218-4dd90fe9cec8 | online | 0 |

+------+----------------+--------------------------------------+--------+---------+

Next, create at least one storage class that will be used by your PVCs. Be sure snapshots and allowVolumeExpansion are set to true if you would like to exercise those features. Additional features are available and described in the Trident documentation and additional examples are also provided in the trident-installer/sample-input directory. A simple example is shown below:

storage_class.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ontap-gold
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: csi.trident.netapp.io
parameters:
  backendType: "ontap-nas"
  provisioningType: "thin"
  snapshots: "true"
allowVolumeExpansion: true

Apply the storage class to your EKS-A cluster.

tme@eksadmin:~$ kubectl apply -f storage_class.yaml

storageclass.storage.k8s.io/ontap-gold created

To take snapshots of your PVs, the CSI snapshot controller must be installed on the cluster. It can be installed in any namespace. Install the CSI snapshotter CRDs, the snapshot controller RBAC, and the snapshot controller pod using the following commands:

tme@eksadmin:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

tme@eksadmin:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

tme@eksadmin:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

tme@eksadmin:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

tme@eksadmin:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

Check to be sure the snapshot controller is installed and running:

tme@eksadmin:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

snapshot-controller-0 1/1 Running 0 26s

Last but not least, create a VolumeSnapshotClass for the snapshots. More information can be found in the Kubernetes documentation and the Trident documentation. A sample snapshotclass yaml file is shown below.

snapshotclass.yaml

apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-snapclass
driver: csi.trident.netapp.io
deletionPolicy: Delete

Apply the snapshotclass to your EKS-A workload cluster:

tme@eksadmin:~$ kubectl apply -f snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-snapclass created

Now you are all set up! Time to start using Astra Trident!

This is great, how can I use some of the features?

Astra Trident will recognize any PVCs created in your EKS-A cluster and automatically create a PV as well as a backend volume on your NetApp backend storage. It will also automatically mount those volumes. It can also expand your volumes, create on-demand snapshots, clone PVCs, and more. More information can be found in the Astra Trident documentation.

Just install your application with a PVC pointing to a Trident storageclass. Trident will recognize the PVC and create a PV for you with a volume on the backend as per the PVC request. For example, we will install a Jenkins manifest. We can see Trident immediately made a PV:

tme@eksadmin:~$ kubectl get pvc -n jenkins

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

jenkins-content Bound pvc-f13471f2-8d56-499d-9e71-426ddbf0984b 1Gi RWX ontap-gold 8s

tme@eksadmin:~$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

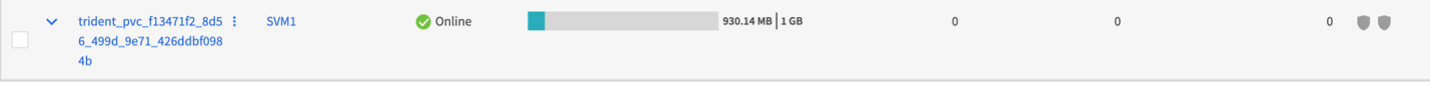

pvc-f13471f2-8d56-499d-9e71-426ddbf0984b 1Gi RWX Delete Bound jenkins/jenkins-content ontap-gold 10s We can see the volume on the ONTAP backend:
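The Jenkins manifest itself is not reproduced in this blog. As a rough sketch, a claim consistent with the kubectl output above might be written out like this. The file name jenkins-pvc.yaml is our own placeholder, and the name, size, access mode, and storage class are simply taken from that output.

```shell
# Sketch only: write a PVC manifest that requests storage from the ontap-gold class.
# jenkins-pvc.yaml is a hypothetical file name; the values mirror the output above.
cat > jenkins-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-content
  namespace: jenkins
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ontap-gold
  resources:
    requests:
      storage: 1Gi
EOF

# Apply it once the jenkins namespace exists:
#   kubectl apply -f jenkins-pvc.yaml
```

Because the claim names the ontap-gold storage class, Trident acts as the provisioner and should create the matching PV and backend volume.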

If we need more storage space, we can expand the volume just by editing the PVC and raising the value of spec.resources.requests.storage. Now we can see the volume size increased on the PVC, PV and the backend.

tme@eksadmin:~$ kubectl get pvc -n jenkins

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

jenkins-content Bound pvc-f13471f2-8d56-499d-9e71-426ddbf0984b 2Gi RWX ontap-gold 16m

tme@eksadmin:~$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-f13471f2-8d56-499d-9e71-426ddbf0984b 2Gi RWX Delete Bound jenkins/jenkins-content ontap-gold 16m
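If you prefer not to edit the PVC interactively, one possible alternative is kubectl patch. The sketch below only builds the patch document. The helper name pvc_resize_patch is hypothetical and is not part of kubectl or Trident. The commented usage line assumes the jenkins-content claim from this walkthrough and a storage class with allowVolumeExpansion set to true.

```shell
# Build a patch that raises spec.resources.requests.storage to the given size.
# pvc_resize_patch is a hypothetical helper, not part of kubectl or Trident.
pvc_resize_patch() {
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}' "$1"
}

# Usage against the claim from this walkthrough:
#   kubectl patch pvc jenkins-content -n jenkins -p "$(pvc_resize_patch 2Gi)"
```

Keeping the patch in a small function makes it easy to reuse for other claims or sizes. Note that Kubernetes only allows a PVC's size to grow, not shrink.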

To create an on-demand snapshot, generate a VolumeSnapshot CR and apply it.

snap.yaml

apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: pvc-jenkins-snap
spec:
  volumeSnapshotClassName: csi-snapclass
  source:
    persistentVolumeClaimName: jenkins-content

Applying the manifest creates the snapshot:

tme@eksadmin:~$ kubectl apply -f snap.yaml -n jenkins

volumesnapshot.snapshot.storage.k8s.io/pvc-jenkins-snap created

tme@eksadmin:~$ kubectl get volumesnapshots -n jenkins

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

pvc-jenkins-snap true jenkins-content 2Gi csi-snapclass snapcontent-6653864b-986b-46e3-a882-ad17cedb6604 10s 10s

Of course, there is no sense in creating a snapshot if you cannot use it! We can create a new PVC that uses the snapshot as its data source. First, create the PVC manifest:

pvc-from-snap.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-from-snap
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ontap-gold
  resources:
    requests:
      storage: 2Gi
  dataSource:
    name: pvc-jenkins-snap
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io

Apply the PVC:

tme@eksadmin:~$ kubectl apply -f pvc-from-snap.yaml -n jenkins

persistentvolumeclaim/pvc-from-snap created

tme@eksadmin:~$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-c9483b91-c4e3-42e5-a939-a70bd97dedd9 2Gi RWX Delete Bound jenkins/pvc-from-snap ontap-gold 7s

pvc-f13471f2-8d56-499d-9e71-426ddbf0984b 2Gi RWX Delete Bound jenkins/jenkins-content ontap-gold 30m

Next, we can create a new pod and use this new PVC from the new pod. The cloned data will be available to the new pod!
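As an illustration of that last step, here is a minimal sketch of a pod that mounts the pvc-from-snap claim. The pod name snap-reader, the busybox image, the /data mount path, and the file name pod-from-snap.yaml are all assumptions made for this example, not something prescribed by Trident.

```shell
# Sketch only: write a pod manifest that mounts the PVC restored from the snapshot.
# Pod name, image, mount path, and file name are hypothetical placeholders.
cat > pod-from-snap.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: snap-reader
  namespace: jenkins
spec:
  containers:
    - name: shell
      image: busybox
      command: ["sh", "-c", "ls -la /data && sleep 3600"]
      volumeMounts:
        - name: restored
          mountPath: /data
  volumes:
    - name: restored
      persistentVolumeClaim:
        claimName: pvc-from-snap
EOF

# Apply the manifest, then inspect the directory listing the container printed:
#   kubectl apply -f pod-from-snap.yaml
#   kubectl logs snap-reader -n jenkins
```

The pod must run in the same namespace as the claim, which is why the manifest sets namespace: jenkins.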

Now what?

Now, you are all set up to have enterprise storage management for all your applications running on an EKS-Anywhere cluster! You can provision, snapshot, expand, and clone persistent volumes, and use other features mentioned in the Astra Trident documentation. So, sit back, relax, and rest assured your persistence needs on EKS-Anywhere are being fully managed.

Learn more about NetApp Astra!