
January 27, 2021

Topics: Cloud Tiering, Data Tiering | Advanced | 3 minute read

Organizations large and small use digital technology platforms to power their business operations. As the ways and means of this digital transformation evolve with new technologies such as IoT, AI, and whatever the next big thing turns out to be, one thing is certain: far more data will be generated in the future. Some of this data will be transient, but the vast majority of it will be permanent and will need to be stored long term.

IDC estimated that more than 59ZB of data would be created, captured, copied, and consumed during 2020 alone, a figure boosted by the sharp rise in the use of digital technologies during the COVID-19 pandemic. In line with a similar Cisco analysis, which predicted that global IP traffic would reach 4.5ZB by 2022 due to growth in IoT traffic, video, and new users, a joint Seagate and IDC study predicts that the world’s collective data storage requirements will grow from 45ZB in 2019 to 175ZB by 2025.

How can you identify cost-saving opportunities for this growing volume of data and actually realize those savings? Our new guidebook on how Cloud Tiering uses Inactive Data Reporting shows how you can discover cold data sitting on active, high-performance on-prem storage systems and tier it to less expensive, more scalable cloud object storage.

Find out the basics of how this works and where you can get the guidebook in this sneak peek.

What Is Cold Data?

All digital enterprise data tends to move through the typical stages of the data life cycle, which you can read about in detail here. As data moves through these stages, its relevance and value change. Freshly created data is generally more important and more valuable because it is current, and it is often classified as “hot” or “warm.” Hot and warm data tend to be accessed frequently by applications and enterprise users for ongoing processing.

Once hot and warm data has been processed and analyzed and has served its purpose of providing meaningful intelligence, it often has to be retained for a long period in an infrequently accessed, cold state. There are several reasons for this. Many organizations, such as financial institutions and public sector bodies, face regulatory and compliance requirements that oblige them to retain data for anywhere from six months to 25 years or more. In some cases, data may even need to be kept indefinitely. Although these requirements vary by region, one good example is the clearly defined data retention rules from the UK’s Financial Conduct Authority (FCA), which set out the retention periods mandatory for every domestic and international financial organization operating in the UK. The US Federal Reserve has similar requirements.

In addition to regulatory requirements, many organizations need to retain cold data for long periods for operational reasons. Historical data, such as the data from a completed project or historical records of share price movements, can be used effectively for data mining in the future. With technologies such as big data analytics and AI, which work hand in hand with historical data, future behaviors and business opportunities can be predicted. That makes cold data valuable to every organization, and retaining it on electronic media that can be accessed on demand is a must-have for many of them.

Another type of cold data with long retention requirements is the copies of data kept for security, backup, and disaster recovery (DR) purposes. These copies are usually stored at a different location from the primary copy, and they typically belong to the “archival” stage of the enterprise data life cycle. In an increasingly digital world, data is the biggest asset any organization has, and keeping proper backups of it in a secure, offsite location can be an existential requirement in a crisis, such as an accidental deletion or a major security incident like a ransomware attack that renders the primary hot or warm dataset inaccessible.

Cold data such as backup and DR copies, along with historical data retained for future use, can make up as much as 70% of a typical enterprise’s storage requirements. Because this data does not need regular, frequent access, it is better suited to high-capacity, low-cost cloud object storage platforms such as Amazon S3, Azure Blob Storage, and Google Cloud Storage, which can significantly reduce its total cost of ownership over its lifecycle.
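To make the cost argument concrete, here is a minimal Python sketch that compares keeping everything on primary storage with tiering the cold share to object storage. The per-TB prices below are hypothetical round numbers chosen only for illustration, and the model deliberately ignores retrieval and egress charges, network costs, and licensing; plug in your own figures before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierPrices:
    """Hypothetical monthly prices per TB; substitute your own figures."""
    on_prem_per_tb: float  # fully loaded cost of primary (flash) capacity
    object_per_tb: float   # cloud object storage capacity cost


def monthly_cost(total_tb: float, cold_fraction: float,
                 prices: TierPrices, tiered: bool) -> float:
    """Monthly capacity cost with or without tiering the cold share."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    if not tiered:
        # Everything, hot and cold, stays on the on-prem performance tier.
        return total_tb * prices.on_prem_per_tb
    cold_tb = total_tb * cold_fraction
    hot_tb = total_tb - cold_tb
    # Hot data stays on-prem; cold data is billed at object storage rates.
    return hot_tb * prices.on_prem_per_tb + cold_tb * prices.object_per_tb


def savings_percent(total_tb: float, cold_fraction: float,
                    prices: TierPrices) -> float:
    """Percentage reduction in monthly capacity cost from tiering."""
    before = monthly_cost(total_tb, cold_fraction, prices, tiered=False)
    after = monthly_cost(total_tb, cold_fraction, prices, tiered=True)
    return 100.0 * (before - after) / before if before else 0.0


if __name__ == "__main__":
    prices = TierPrices(on_prem_per_tb=100.0, object_per_tb=20.0)  # hypothetical
    print(monthly_cost(100, 0.7, prices, tiered=False))  # 10000.0
    print(monthly_cost(100, 0.7, prices, tiered=True))   # 4400.0
    print(savings_percent(100, 0.7, prices))             # 56.0
```

With 100 TB of which 70% is cold (the upper bound quoted above), the assumed prices yield a 56% reduction in monthly capacity cost. The result is driven almost entirely by the cold fraction and the price ratio between the two tiers, which is why measuring how much data is actually cold is the first step.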

How Does Cloud Tiering Help?

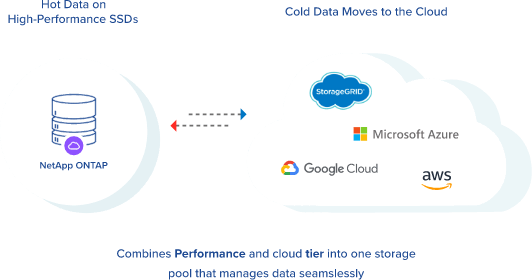

NetApp Cloud Tiering is a Software-as-a-Service (SaaS) offering that lets on-premises ONTAP systems take advantage of low-cost cloud object storage by leveraging NetApp’s FabricPool technology. Cloud Tiering extends high-performance on-premises flash tiers to the cloud by seamlessly moving cold data to high-capacity, durable, low-cost object storage tiers, with no impact on the front-end applications and users of that data.

With Cloud Tiering, active (hot and warm) data remains on the high-performance tiers in the data center to meet application performance needs, while cold, inactive data is automatically identified and tiered off to an object storage platform, freeing up valuable capacity on the on-premises storage array. ONTAP data efficiencies such as deduplication and compression are also preserved in the cloud tier, which minimizes the capacity consumed in object storage and maximizes cost savings. Inactive Data Reporting is an essential part of how Cloud Tiering identifies the data it will tier to the cloud.
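The idea behind identifying inactive data can be illustrated with a small Python sketch. This is not how ONTAP or Inactive Data Reporting is implemented; it simply assumes that each block’s age since last access is known and treats a block as cold once that age reaches a cooling period. The `Block` and `VolumeStats` types, the sample volume names, and the 31-day default threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Block:
    volume: str             # hypothetical volume name
    size_bytes: int         # physical size of the block
    days_since_access: int  # age since the block was last read or written


@dataclass
class VolumeStats:
    total_bytes: int = 0
    cold_bytes: int = 0

    @property
    def cold_percent(self) -> float:
        # Share of the volume's capacity inactive past the cooling period.
        return 100.0 * self.cold_bytes / self.total_bytes if self.total_bytes else 0.0


def is_cold(block: Block, cooling_days: int) -> bool:
    """A block counts as cold once it has gone unaccessed for the cooling period."""
    return block.days_since_access >= cooling_days


def inactive_data_report(blocks: Iterable[Block],
                         cooling_days: int = 31) -> Dict[str, VolumeStats]:
    """Aggregate total and cold capacity per volume (illustrative only)."""
    report: Dict[str, VolumeStats] = {}
    for block in blocks:
        stats = report.setdefault(block.volume, VolumeStats())
        stats.total_bytes += block.size_bytes
        if is_cold(block, cooling_days):
            stats.cold_bytes += block.size_bytes
    return report


def tiering_candidates(blocks: Iterable[Block],
                       cooling_days: int = 31) -> List[Block]:
    """Blocks that a tiering policy like this one would move to object storage."""
    return [b for b in blocks if is_cold(b, cooling_days)]


if __name__ == "__main__":
    sample = [
        Block("vol_db", 4096, 2),
        Block("vol_db", 4096, 45),
        Block("vol_home", 4096, 90),
        Block("vol_home", 4096, 400),
    ]
    for name, stats in inactive_data_report(sample).items():
        print(f"{name}: {stats.cold_percent:.0f}% cold")
    # vol_db: 50% cold
    # vol_home: 100% cold
```

Reporting cold capacity in bytes per volume, rather than just counting blocks, lets the result feed directly into a cost comparison. The cooling period is the main tunable in this sketch: a shorter period classifies more data as cold, at the risk of tiering data that is about to be read again.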

Find Out More in the Complete Guidebook

Data is growing exponentially, but your data center costs shouldn’t have to grow along with it. To see a full walkthrough of the Inactive Data Reporting process and how Cloud Tiering can free up space in your on-prem data center storage system, sign up to download the full guidebook.