September 7, 2022

Topics: Cloud Volumes ONTAP, File Services, AWS, Master | 8 minute read

The main goal of going serverless with a platform such as AWS Lambda is to offload the burden of managing infrastructure to the cloud provider. This frees up developers, who don’t have to worry about infrastructure as they pump out code. However, some AWS Lambda use cases still require persistent storage, including cloud file sharing architectures.

AWS Lambda users will need to find a solution for storage persistence, and NetApp Cloud Volumes ONTAP can help them do that. In this blog we’ll provide a worked example of how to configure access to persistent NFS volumes using Cloud Volumes ONTAP and AWS Lambda.

Jump down to the relevant sections using these links:

- AWS Lambda Basics

- How to Set Up Access to NFS Storage on Cloud Volumes ONTAP from AWS Lambda

- Conclusion

AWS Lambda Basics

The value of AWS Lambda lies in the speed it gives developers. Teams can quickly release new code into production while the nuts and bolts of the infrastructure stay out of view. This keeps each release focused on business priorities. Faster time to market (TTM) can translate directly into increased profits, which makes Lambda a good fit for agile delivery.

AWS Lambda functions are triggered automatically and run by AWS when predefined conditions are met or specific events take place. Part of the flexibility of the service is the wide range of programming languages that can be used to develop Lambda functions: users can rely on Go, Ruby, JavaScript, Java, C#, and Python.

Using AWS Step Functions, it is possible to orchestrate multiple Lambda functions to work together and leverage other services and products from AWS. This enables users to create complex automated workflows.

In a serverless architecture, an event such as an incoming web request triggers a Lambda function or a Step Functions workflow that is ephemeral by definition. The resources allocated to an execution, including its local data storage, exist only for as long as the Lambda function (or Step Functions workflow) runs. However, use cases that share files across functions during execution, or that store data after (or between) executions, need a way for data to persist. To meet this demand, Lambda functions can be developed to connect to persistent storage services on AWS.

For more on the persistent file storage options that can be leveraged by AWS Lambda, check out this blog on the different AWS file storage options for use with AWS Lambda.

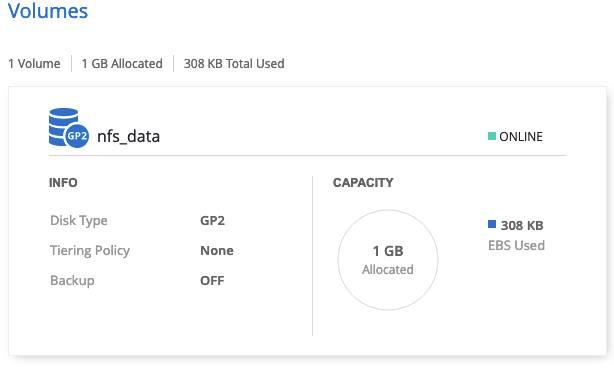

One of those options is NetApp Cloud Volumes ONTAP. Cloud Volumes ONTAP is an enterprise data management solution that provides consistency across multicloud and hybrid environments. On AWS, it is built on block-based Amazon EBS disk storage and can serve persistent NFS, SMB, and iSCSI storage to AWS Lambda functions.

In the rest of this post, we’ll take a step-by-step walkthrough on how to connect Cloud Volumes ONTAP and AWS Lambda so that Lambda functions can leverage NFS volumes via Cloud Volumes ONTAP.

How to Set Up Access to NFS Storage on Cloud Volumes ONTAP from AWS Lambda

In this section, we will provide a complete practical example of accessing NFS files hosted in Cloud Volumes ONTAP from AWS Lambda.

Configuring Cloud Volumes ONTAP for AWS Lambda

- The first step in the process involves accessing NetApp BlueXP Console, which acts as the central web-based platform from which Cloud Volumes ONTAP deployments can be created and managed. You can do this by signing up for a NetApp BlueXP account and starting a free 30-day trial of Cloud Volumes ONTAP.

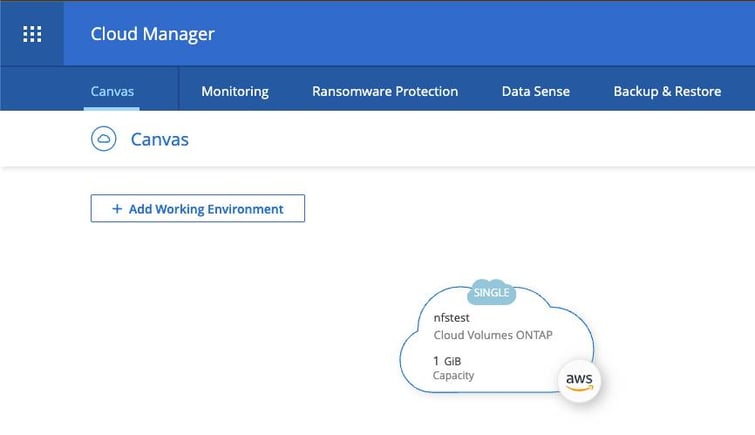

- After accessing BlueXP Console, we can proceed to create a new instance of Cloud Volumes ONTAP. From the Canvas tab, the starting point of any BlueXP Console session, we can configure the instance to our particular specifications, deploy an initial volume, and then share out this volume over NFS.

- In the Canvas tab you’ll be able to see all of the working environments you currently control. From here you can inspect the details of any of these deployments, manage volumes and file shares, and perform the majority of day-to-day administrative operations.

This graphical interface will update to show that our new Cloud Volumes ONTAP installation is ready for use after initialization is complete.

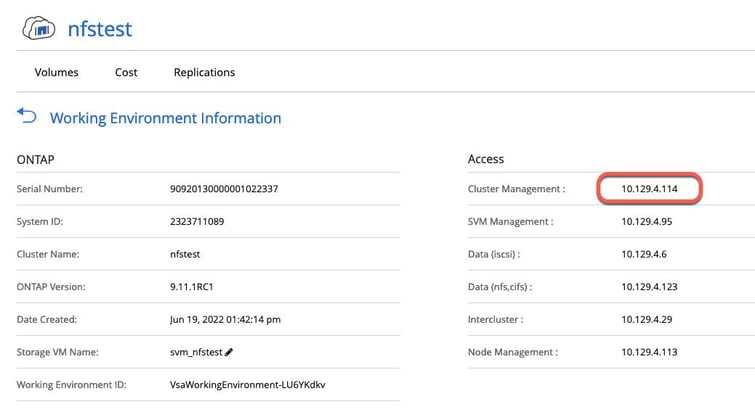

- In order for AWS Lambda to make a successful NFS connection to Cloud Volumes ONTAP, we must make an advanced configuration change that is only possible through an SSH connection to the Cloud Volumes ONTAP instance itself. We can find the IP address to connect to by inspecting the details of our instance in BlueXP Console.

The IP address for our instance is highlighted in the screenshot below.

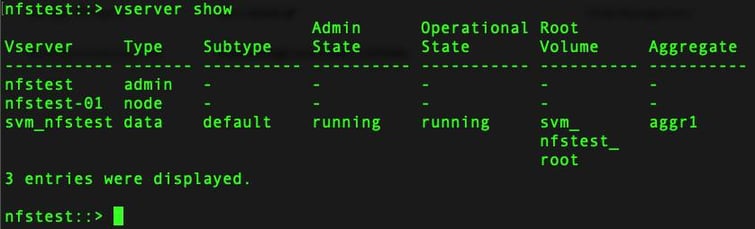

- There is a configuration option that must be changed that relates to allowing access to NFS shares over non-reserved client ports, i.e. from port numbers 1024 or higher. This is required as our AWS Lambda function will not be executed as root, and therefore will not be able to initiate a connection from a reserved port.

The following shows the commands necessary to perform this change, as per the NetApp documentation:
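The command listing itself did not survive in this copy of the post. As a hedged reconstruction based on the ONTAP CLI, and not necessarily the exact session the authors ran, the change is typically made with the `-mount-rootonly` option of `vserver nfs modify` at the advanced privilege level. The `admin` user name and the `<management_ip>` and `<svm_name>` placeholders are assumptions to be replaced with the values for your own instance:

```text
$ ssh admin@<management_ip>

::> set -privilege advanced
::*> vserver nfs modify -vserver <svm_name> -mount-rootonly disabled
```

Disabling `-mount-rootonly` lets the storage VM accept NFS mount requests that originate from client ports 1024 and above.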

- We’re now ready to connect to our NFS share. We can again use BlueXP Console to find the mount point for the share, which can be found within the Volumes section of the Cloud Volumes ONTAP instance.

- Hovering over the volume and selecting Mount Command will provide us the connection details we need to use from AWS Lambda.
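The function code later in this post addresses the share as an `nfs://` URL rather than in the `host:/export` form that a mount command uses. A minimal sketch of that conversion, assuming the mount command names the share as `172.31.9.23:/nfs_data` (the helper name `mount_target_to_nfs_url` is hypothetical and not part of libnfs):

```python
def mount_target_to_nfs_url(mount_target: str) -> str:
    """Convert 'host:/export/path' into 'nfs://host/export/path'.

    Hypothetical helper for illustration; assumes an IPv4 address or a
    hostname (no IPv6 literals) followed by an absolute export path.
    """
    host, sep, export_path = mount_target.strip().partition(":")
    if not sep or not host or not export_path.startswith("/"):
        raise ValueError(f"expected 'host:/export', got {mount_target!r}")
    return f"nfs://{host}{export_path}"


if __name__ == "__main__":
    # Address and export name as used in the function code in this post
    print(mount_target_to_nfs_url("172.31.9.23:/nfs_data"))
```

The resulting URL is what gets passed to the NFS client library when the function connects to the share.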

Creating the AWS Lambda Function

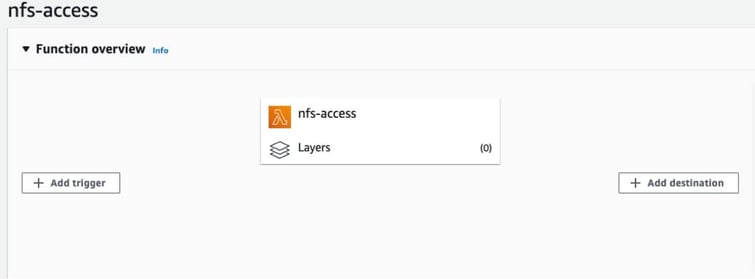

In this step we will create the AWS Lambda function that will connect to the NFS volume on Cloud Volumes ONTAP. The AWS Lambda function will be created using the AWS Console.

As this function will need access to resources within our VPC, i.e. Cloud Volumes ONTAP, we will need to provide the IAM role used to execute the function with AWSLambdaVPCAccessExecutionRole permissions.
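For readers who prefer to script this step, the following is a rough sketch of creating such a role with boto3 instead of the console. It assumes boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and the role name `NetAppCVOLambda` is taken from the deployment output shown later in this post.

```python
import json

# AWS-managed policy that allows a function to manage the network
# interfaces it needs inside a VPC and to write logs to CloudWatch.
VPC_ACCESS_POLICY_ARN = (
    "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole"
)


def lambda_trust_policy() -> dict:
    """Trust policy that lets the Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_execution_role(role_name: str) -> str:
    """Create the role, attach the VPC access policy, return the role ARN."""
    import boto3  # imported lazily so the helpers above work without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(lambda_trust_policy()),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=VPC_ACCESS_POLICY_ARN)
    return role["Role"]["Arn"]
```

Calling `create_execution_role("NetAppCVOLambda")` would produce a role equivalent to the one selected in the console in this walkthrough.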

After the function has been created, we must also provide our network configuration details under the VPC section of AWS Lambda function’s definition page.

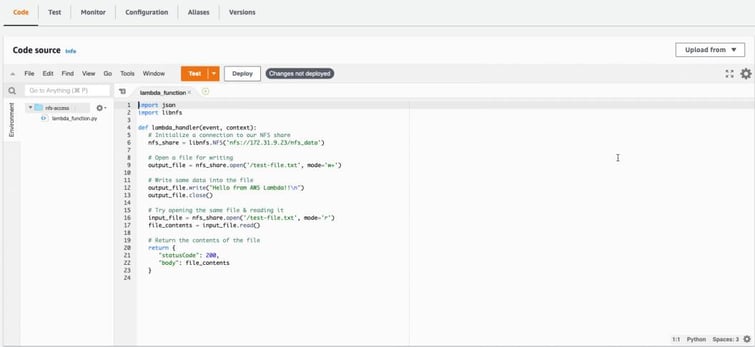
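The same network settings can also be applied programmatically. The sketch below is an illustration only, assuming boto3 and configured credentials; the helper names are hypothetical, while the subnet and security group IDs are the ones that appear in the deployment output later in this post.

```python
def build_vpc_config(subnet_ids, security_group_ids) -> dict:
    """Build the VpcConfig structure expected by the Lambda API."""
    subnet_ids = list(subnet_ids)
    security_group_ids = list(security_group_ids)
    if not subnet_ids or not security_group_ids:
        raise ValueError("at least one subnet and one security group are required")
    return {"SubnetIds": subnet_ids, "SecurityGroupIds": security_group_ids}


def attach_function_to_vpc(function_name: str, vpc_config: dict) -> dict:
    """Apply the VPC settings to an existing Lambda function."""
    import boto3  # imported lazily so build_vpc_config works without boto3

    client = boto3.client("lambda")
    return client.update_function_configuration(
        FunctionName=function_name, VpcConfig=vpc_config
    )


if __name__ == "__main__":
    config = build_vpc_config(
        ["subnet-fddc8ab4", "subnet-e92ec9b0", "subnet-ac2908cb"],
        ["sg-0a065573"],
    )
    print(config)
```

Passing the result to `attach_function_to_vpc("nfs-access", config)` places the function in the same VPC as the Cloud Volumes ONTAP instance.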

We can now move on to implementing our function. For this example we will use Python; however, the same technique will translate to most other languages.

- Using an NFS client library called libnfs, we will directly mount our NFS share from within the function code, and then proceed to perform file reads and writes.

The following shows the complete AWS Lambda function definition:

    import json

    import libnfs


    def lambda_handler(event, context):
        # Initialize a connection to our NFS share
        nfs_share = libnfs.NFS('nfs://172.31.9.23/nfs_data')

        # Open a file for writing
        output_file = nfs_share.open('/test-file.txt', mode='w+')

        # Write some data into the file
        output_file.write("Hello from AWS Lambda!!\n")
        output_file.close()

        # Try opening the same file & reading it
        input_file = nfs_share.open('/test-file.txt', mode='r')
        file_contents = input_file.read()
        input_file.close()

        # Return the contents of the file
        return {
            "statusCode": 200,
            "body": file_contents
        }

- To successfully deploy the function, we will need to package up our external dependencies along with the code, including the native libnfs.so.13 dependency used by the libnfs Python library. This can be achieved by creating a zip archive containing all relevant files in a pre-defined directory structure, as described in the AWS documentation:

    $ ls
    lambda_function.py
    $ mkdir package
    $ pip install --target ./package libnfs
    Collecting libnfs
      Using cached libnfs-1.0.post4.tar.gz (48 kB)
    Installing collected packages: libnfs
      Running setup.py install for libnfs ... done
    Successfully installed libnfs-1.0.post4
    $ mkdir package/lib
    $ cp /usr/lib64/libnfs.so.13.0.0 package/lib/libnfs.so.13
    $ cd package
    $ zip -r9 ../function.zip .
      adding: libnfs/ (stored 0%)
      adding: libnfs/_libnfs.cpython-38-x86_64-linux-gnu.so (deflated 73%)
      adding: libnfs/__init__.py (deflated 74%)
      adding: libnfs/libnfs.py (deflated 86%)
      adding: libnfs/__pycache__/ (stored 0%)
      adding: libnfs/__pycache__/libnfs.cpython-38.pyc (deflated 73%)
      adding: libnfs/__pycache__/__init__.cpython-38.pyc (deflated 56%)
      adding: lib/ (stored 0%)
      adding: lib/libnfs.so.13 (deflated 67%)
    $ cd ..
    $ zip -g function.zip lambda_function.py
      adding: lambda_function.py (deflated 35%)
    $ aws lambda update-function-code --function-name nfs-access --zip-file fileb://function.zip
    4iYqp+d2Bzvrfv2Pqxr17gJByEyGYufyP72IuTNzvRw= 298335 arn:aws:lambda:XXXXXXX:XXXXXXXXXX:function:nfs-access nfs-access lambda_function.lambda_handler 2020-07-02T09:32:28.193+0000 Successful 128 f0ba0358-2f5d-4cb4-9e5a-333f2580a4f8 arn:aws:iam::XXXXXXXXXX:role/NetAppCVOLambda python3.8 Active 3 $LATEST
    TRACINGCONFIG PassThrough
    VPCCONFIG vpc-2fdfb248
    SECURITYGROUPIDS sg-0a065573
    SUBNETIDS subnet-fddc8ab4
    SUBNETIDS subnet-e92ec9b0
    SUBNETIDS subnet-ac2908cb

Running the AWS Lambda Function

We can now try executing the AWS Lambda function from within the AWS Console. As we can see from the screenshot below, the function is able to successfully connect to the NFS share hosted on Cloud Volumes ONTAP and read and write files as required. We can also mount this share normally from a Linux host to verify that the files have in fact been created as we would expect.
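As an alternative to the console, the function can be invoked from a script. The following is a minimal sketch, assuming boto3 is installed and credentials are configured; the helper names are hypothetical, and `nfs-access` is the function name used in the deployment step above.

```python
import json


def extract_body(payload_bytes: bytes) -> str:
    """Pull the file contents out of the handler's JSON response."""
    result = json.loads(payload_bytes)
    if result.get("statusCode") != 200:
        raise RuntimeError(f"unexpected response from function: {result!r}")
    return result["body"]


def invoke_nfs_function(function_name: str = "nfs-access") -> str:
    """Invoke the function synchronously and return the file contents."""
    import boto3  # imported lazily so extract_body works without boto3

    client = boto3.client("lambda")
    response = client.invoke(FunctionName=function_name)
    return extract_body(response["Payload"].read())
```

If the function can reach the share, `invoke_nfs_function()` should return the text that the handler wrote to `/test-file.txt`. An error inside the function produces a payload without the expected status code, which this sketch surfaces as a `RuntimeError`.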

Conclusion

AWS Lambda functions are able to integrate with various forms of AWS file storage. As we have demonstrated in this article, we can also use them to successfully access NFS shares hosted on Cloud Volumes ONTAP. This is achieved with minimal custom configuration and, due to the widespread availability of NFS client library implementations, should be compatible with most of the languages supported by AWS Lambda.