February 28, 2021

Topics: Cloud Volumes ONTAP, Database, Google Cloud, Elementary, 8 minute read, Analytics

What is Google Cloud Big Data?

Big data systems store and process massive amounts of data. Hosting big data infrastructure in the cloud is a natural fit, because the cloud provides virtually unlimited data storage and straightforward options for highly parallelized big data processing and analysis.

The Google Cloud Platform provides multiple services that support big data storage and analysis. Possibly the most important is BigQuery, a high performance SQL-compatible engine that can perform analysis on very large data volumes in seconds. GCP provides several other services, including Dataflow, Dataproc and Data Fusion, to help you create a complete cloud-based big data infrastructure.

This is part of our series of articles on Google Cloud database services.

In this article, you will learn:

- Google Big Data Services

- Architecture for Large Scale Big Data Processing on Google Cloud

- GCP Big Data Best Practices

- Google Cloud Big Data Q&A

- Google Cloud Big Data with NetApp Cloud Volumes ONTAP

Google Big Data Services

GCP offers a wide variety of big data services you can use to manage and analyze your data, including:

Google Cloud BigQuery

BigQuery lets you store and query datasets holding massive amounts of data. The service uses a table structure, supports SQL, and integrates seamlessly with all GCP services. You can use BigQuery for both batch processing and streaming. This service is ideal for offline analytics and interactive querying.

Google Cloud Dataflow

Dataflow offers serverless batch and stream processing. You can create your own management and analysis pipelines, and Dataflow will automatically manage your resources. The service can integrate with GCP services like BigQuery and third-party solutions like Apache Spark.

Google Cloud Dataproc

Dataproc lets you run your open source data stack on GCP and streamline operations with automation. This fully managed service helps you query and stream your data using tools like Apache Hadoop and Apache Spark. You can integrate Dataproc with other GCP services like Bigtable.

Google Cloud Pub/Sub

Pub/Sub is an asynchronous messaging service that manages the communication between different applications. Pub/Sub is typically used for stream analytics pipelines. You can integrate Pub/Sub with systems on or off GCP, and perform general event data ingestion and actions related to distribution patterns.

Google Cloud Composer

Composer is a fully-managed cloud-based workflow orchestration service based on Apache Airflow. You can use Composer to manage data processing across several platforms and create your own hybrid environment. Composer lets you define the process using Python. The service then automates processing jobs, like ETL.
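Composer workflows are defined in Python as Airflow DAGs (directed acyclic graphs of tasks). The snippet below is a minimal, library-free sketch of that idea and does not use the Airflow API: it models a hypothetical ETL workflow as a dependency graph and derives a valid run order with the standard library's `graphlib` module (Python 3.9+). The task names are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL workflow: each task maps to the set of tasks it depends on.
etl_dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(dependencies):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(etl_dependencies)
print(order)
```

An orchestrator such as Airflow adds scheduling, retries, and monitoring on top of this kind of dependency ordering.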

Google Cloud Data Fusion

Data Fusion is a fully-managed data integration service that enables stakeholders of various skill levels to prepare, transfer, and transform data. Data Fusion lets you create code-free ETL/ELT data pipelines using a point-and-click visual interface. Data Fusion is built on the open source CDAP project, which provides the portability needed to work with hybrid and multicloud integrations.

Google Cloud Bigtable

Bigtable is a fully-managed NoSQL database service built to provide high performance for big data workloads. Bigtable runs on a low-latency storage stack, supports the open-source HBase API, and is available globally. The service is ideal for time-series, financial, marketing, graph, and IoT data. It powers core Google services, including Analytics, Search, Gmail, and Maps.

Google Cloud Data Catalog

Data Catalog offers data discovery capabilities you can use to capture business and technical metadata. To easily locate data assets, you can use schematized tags and build a customized catalog. To protect your data, the service uses access-level controls. To classify sensitive information, the service integrates with Google Cloud Data Loss Prevention.

Related content: read our guide to Google Cloud Analytics

Architecture for Large Scale Big Data Processing on Google Cloud

Google provides a reference architecture for large-scale analytics on Google Cloud, intended for workloads with more than 100,000 events per second or over 100 MB streamed per second. The architecture is based on Google BigQuery.

Google recommends building a big data architecture with hot paths and cold paths. A hot path is a data stream that requires near-real-time processing, while a cold path is a data stream that can be processed after a short delay.

This has several advantages, including:

- Ability to store logs for all events without exceeding quotas

- Reduced costs, because only some events need to be handled as streaming inserts (which are more expensive)

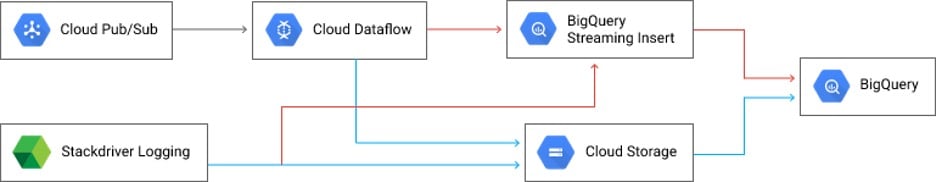

The following diagram illustrates the architecture.

Source: Google Cloud

Data originates from two possible sources: analytics events published to Cloud Pub/Sub, and logs from Google Stackdriver Logging (now Cloud Logging). Data is divided into two paths:

- The hot path (red arrows) feeds into BigQuery using a streaming insert, to enable continuous data flow

- The cold path (blue arrows) feeds into Google Cloud Storage, and from there the data is loaded in batches into BigQuery
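The split between the two paths can be sketched in a few lines of Python. This is a simplified, in-memory illustration and not Google's reference implementation: the `needs_realtime` flag, the `Router` class, and the batch size are all assumptions made for the example, and plain lists stand in for BigQuery streaming inserts and Cloud Storage staging.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """Splits incoming events into a hot path and a cold path."""

    batch_size: int  # assumed number of cold events per batch load
    hot: list = field(default_factory=list)  # stands in for streaming inserts
    cold_buffer: list = field(default_factory=list)  # stands in for staged files
    cold_batches: list = field(default_factory=list)  # stands in for batch loads

    def route(self, event: dict) -> None:
        """Send urgent events to the hot path and buffer everything else."""
        if event.get("needs_realtime"):
            self.hot.append(event)
        else:
            self.cold_buffer.append(event)
            if len(self.cold_buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        """Turn the buffered cold events into one batch, if there are any."""
        if self.cold_buffer:
            self.cold_batches.append(self.cold_buffer)
            self.cold_buffer = []


router = Router(batch_size=2)
for i in range(5):
    # Hypothetical rule: even-numbered events need near-real-time handling.
    router.route({"id": i, "needs_realtime": i % 2 == 0})
router.flush()

print([e["id"] for e in router.hot])
print([[e["id"] for e in batch] for batch in router.cold_batches])
```

Only the events that need near-real-time handling go through the more expensive streaming route; the rest accumulate and are loaded together.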

GCP Big Data Best Practices

Here are a few best practices that will help you get more out of key Google Cloud big data services, such as Cloud Pub/Sub and Google BigQuery.

Data Ingestion and Collection

Ingesting data is a commonly overlooked part of big data projects. There are several options for ingesting data on Google Cloud:

- Using APIs on the data provider: pull data from APIs at scale using Compute Engine instances (virtual machines) or Kubernetes

- Real-time streaming: best handled with Cloud Pub/Sub

- Large volume of data on-premises: most suitable for Google Transfer Appliance or GCP Online Transfer, depending on volume

- Large volume of data on other cloud providers: use Cloud Storage Transfer Service
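The options above amount to a small decision table. The helper below is a hypothetical sketch of that table: the function name, the source labels, and the `appliance_threshold_tb` parameter are assumptions for illustration. The article does not state at what volume Transfer Appliance becomes preferable, so the caller must supply that threshold.

```python
def choose_ingestion(source, volume_tb=None, appliance_threshold_tb=None):
    """Map a data source type to the ingestion option listed above."""
    if source == "provider_api":
        return "Compute Engine or Kubernetes workers pulling from the API"
    if source == "realtime_stream":
        return "Cloud Pub/Sub"
    if source == "other_cloud":
        return "Cloud Storage Transfer Service"
    if source == "on_premises":
        # Without a volume and a caller-chosen threshold, no single answer exists.
        if volume_tb is None or appliance_threshold_tb is None:
            return "Transfer Appliance or online transfer, depending on volume"
        if volume_tb >= appliance_threshold_tb:
            return "Transfer Appliance"
        return "online transfer"
    raise ValueError(f"unknown source type: {source!r}")


print(choose_ingestion("realtime_stream"))
print(choose_ingestion("on_premises", volume_tb=500, appliance_threshold_tb=100))
```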

Streaming Insert

If you need to stream and process data in near-real time, you’ll need to use streaming inserts. A streaming insert writes data to BigQuery and makes it available for querying without requiring a load job, which can incur a delay. You can perform a streaming insert on a BigQuery table using the Cloud SDK or Google Dataflow.

Note that it takes a few seconds for streaming data to become available for querying. After data is ingested using streaming insert, it takes up to 90 minutes for it to be available for operations like copy and export.
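To make the idea more concrete, the sketch below builds the kind of request body that BigQuery's `tabledata.insertAll` REST method accepts: a list of rows, each with a `json` payload and an optional `insertId` that BigQuery uses for best-effort de-duplication. The snippet only constructs the payload and does not send anything; the helper name and the event fields are hypothetical, and the request shape should be checked against the current BigQuery documentation before use.

```python
import json


def build_insert_all_body(rows, id_field):
    """Wrap plain dict rows in the rows/insertId/json structure."""
    return {
        "rows": [
            # insertId is derived from a field of the row (an assumption here)
            # so that retries of the same event carry the same identifier.
            {"insertId": str(row[id_field]), "json": row}
            for row in rows
        ]
    }


# Hypothetical analytics events.
events = [
    {"event_id": "e-1", "user": "alice", "action": "click"},
    {"event_id": "e-2", "user": "bob", "action": "view"},
]

body = build_insert_all_body(events, id_field="event_id")
print(json.dumps(body, indent=2))
```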

Use Nested Tables

You can nest records within tables to create efficiencies in Google BigQuery. For example, if you are processing invoices, the individual lines inside the invoice can be stored as an inner table. The outer table can contain data about the invoice as a whole (for example, the total invoice amount).

This way, if you only need to process data about invoices, and not individual invoice lines, you can run a query only on the outer table to save costs and improve performance. BigQuery only accesses items in the inner table when the query explicitly refers to them.
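As an illustration of the invoice example, the sketch below expresses a nested table in the JSON schema form BigQuery uses, where a `RECORD` field with mode `REPEATED` holds the inner table. The dataset, table, and field names are hypothetical. Two Standard SQL queries follow: one that reads only outer columns, and one that explicitly expands the invoice lines with `UNNEST`.

```python
# Hypothetical schema: one row per invoice, with its lines nested inside.
invoice_schema = [
    {"name": "invoice_id", "type": "STRING", "mode": "REQUIRED"},
    {"name": "total_amount", "type": "NUMERIC", "mode": "REQUIRED"},
    {
        "name": "lines",
        "type": "RECORD",
        "mode": "REPEATED",  # repeated record = the inner table
        "fields": [
            {"name": "sku", "type": "STRING", "mode": "REQUIRED"},
            {"name": "quantity", "type": "INTEGER", "mode": "REQUIRED"},
            {"name": "amount", "type": "NUMERIC", "mode": "REQUIRED"},
        ],
    },
]

# Reads only outer columns; the nested lines are never referenced.
INVOICE_TOTALS_SQL = """
SELECT invoice_id, total_amount
FROM `my_dataset.invoices`
"""

# Explicitly refers to the nested lines, so they are read as well.
INVOICE_LINES_SQL = """
SELECT i.invoice_id, line.sku, line.quantity
FROM `my_dataset.invoices` AS i, UNNEST(i.lines) AS line
"""


def nested_field_names(schema):
    """List the top-level fields that hold nested, repeated records."""
    return [
        f["name"]
        for f in schema
        if f["type"] == "RECORD" and f.get("mode") == "REPEATED"
    ]


print(nested_field_names(invoice_schema))
```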

Big Data Resource Management

In many big data projects, you will need to grant access to certain resources to members of your team, other teams, partners or customers. Google Cloud Platform uses the concept of “resource containers”. A container is a grouping of GCP resources that can be dedicated to a specific organization or project.

It is best to define a project for each big data model or dataset. Bring all the relevant resources, including storage, compute, and analytics or machine learning components, into the project container. This will allow you to more easily manage permissions, billing and security.

Google Cloud Big Data Q&A

How Does Google BigQuery Work?

BigQuery runs on a serverless architecture that separates storage and compute and lets you scale each resource independently, on demand. The service lets you easily analyze data using Standard SQL.

When using BigQuery, you can run compute resources as needed and significantly cut down on overall costs. You also do not need to perform database operations or system engineering tasks, because BigQuery manages this layer.

What is BigQuery BI Engine?

BI Engine performs fast in-memory analysis of data stored in BigQuery. It offers sub-second query response times with high concurrency.

You can integrate BI Engine with tools like Google Data Studio and accelerate your data exploration and analysis jobs. Once integrated, you can use Data Studio to create interactive dashboards and reports without compromising scale, performance, or security.

What is Google Cloud Data QnA?

Data QnA is a natural language interface designed for running analytics jobs on BigQuery data. This service lets you get answers by running natural language queries. This means any stakeholder can get answers without having to first go through a skilled BI professional. Data QnA is currently running in private alpha.

Google Cloud Big Data with NetApp Cloud Volumes ONTAP

NetApp Cloud Volumes ONTAP, the leading enterprise-grade storage management solution, delivers secure, proven storage management services on AWS, Azure and Google Cloud. Cloud Volumes ONTAP supports a capacity of up to 368TB and a range of use cases, such as file services, databases, DevOps, or any other enterprise workload. It offers a strong set of features, including high availability, data protection, storage efficiencies, Kubernetes integration, and more.

Cloud Volumes ONTAP supports advanced features for managing SAN storage in the cloud, catering for NoSQL database systems, as well as NFS shares that can be accessed directly from cloud big data analytics clusters.

In particular, Cloud Volumes ONTAP provides storage efficiency features, including thin provisioning, data compression, and deduplication, reducing the storage footprint and costs by up to 70%.