November 29, 2021

Topics: Cloud Volumes ONTAP, High Availability, Google Cloud, Master

Uptime is non-negotiable for any application, whether it’s hosted on premises or in the cloud. Highly available storage plays an important role here: if your data in Google Cloud storage is inaccessible, it’s going to bring down the rest of the application.

Cloud Volumes ONTAP’s high availability (HA) configuration for Google Cloud ensures that your data layer is always accessible without compromising on storage efficiencies or performance.

In this blog, we will take a deep dive into the different components of Cloud Volumes ONTAP HA in Google Cloud to see how it provides highly available storage for applications in Google Cloud.

Click the links below to jump down to the section on:

- Highly Available Storage with Cloud Volumes ONTAP in GCP

- Cloud Volumes ONTAP High Availability Components in GCP

- Conclusion

Highly Available Storage with Cloud Volumes ONTAP in GCP

Google Cloud supports deployment of resources across multiple geographical regions and multiple zones within a region. For applications hosted on Google Cloud virtual machines, customers can choose between zonal and regional persistent disks for the storage layer. Zonal persistent disks (ZPD) replicate data within a zone, but they don’t protect against a failure of that zone. Regional persistent disks (RPD), on the other hand, synchronously replicate data between two zones in the same Google Cloud region. If the primary zone fails, your data is still available because it has been replicated to the second zone.

Customers can leverage RPD to design highly available architectures for applications in Google Cloud. If one zone fails, the disk can be attached to another VM in the secondary zone to access the data. However, there are additional recovery point objective (RPO), recovery time objective (RTO), and performance considerations when choosing the storage layer.

For instance, zonal persistent disks provide higher read/write IOPS than regional persistent disks. We also need to consider the time it takes to attach the RPD to a VM in the secondary zone and bring the application back online, which could impact your RTO.

Learn more about the High Availability Infrastructure Options on Google Cloud Platform.

NetApp Cloud Volumes ONTAP provides an alternative way to deliver a highly available storage layer across different zones in a region. In the backend, Cloud Volumes ONTAP leverages native Google Cloud components such as zonal persistent disks, VPCs, and load balancers to deliver an augmented HA solution for your workloads.

NetApp Cloud Manager also automates the deployment and maintenance of all the storage components, which makes highly available storage easy to manage. You don’t just get an RPO of 0 and an RTO of less than 60 seconds; failover and failback are also completely automated for you.

How does it work? Let’s take a look under the hood at each component and see.

Cloud Volumes ONTAP High Availability Components in GCP

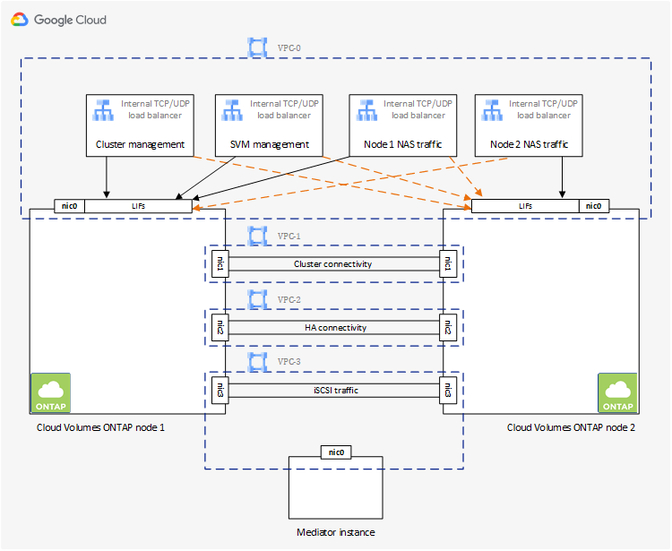

Cloud Volumes ONTAP delivers high availability through two ONTAP nodes, deployed either in a single zone or in multiple zones with synchronous data replication between the nodes. The high level conceptual architecture of a highly available Cloud Volumes ONTAP deployment is shown below.

The ONTAP Nodes

The HA deployment consists of two ONTAP nodes that run on powerful n1-standard or n2-standard machine types in Google Cloud. The deployment uses zonal persistent disks, grouped into aggregates and attached to the nodes, to provision Cloud Volumes ONTAP volumes. When you create a volume, the same number of disks is allocated to both nodes, creating a mirrored aggregate architecture.

Data is synchronously replicated between the two Cloud Volumes ONTAP nodes to ensure availability in the event of a failure. Synchronous mirroring ensures that data is committed to both the source and destination volumes, ensuring consistency and zero data loss. If one of the nodes goes down, the other node continues to serve data. Once the failed node is back online, Cloud Volumes ONTAP initiates a resync and storage giveback process, all of which happens automatically without user intervention.
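To make the acknowledgment rule concrete, here is a minimal Python sketch, assuming a simplified model in which a write counts as safe only once both nodes have committed it, and a node that was offline catches up through a resync. The `Node` and `MirroredVolume` classes are hypothetical illustrations, not ONTAP internals; real replication involves NVRAM logging, aggregates, and much more.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for one ONTAP node's copy of the data."""
    name: str
    online: bool = True
    blocks: dict = field(default_factory=dict)

    def commit(self, key: str, value: bytes) -> bool:
        # A node that is down cannot commit anything.
        if not self.online:
            return False
        self.blocks[key] = value
        return True


class MirroredVolume:
    """Toy model of synchronous mirroring across two nodes."""

    def __init__(self, a: Node, b: Node):
        self.nodes = (a, b)
        self.pending_resync: dict = {}  # writes the offline node missed

    def write(self, key: str, value: bytes) -> bool:
        results = [n.commit(key, value) for n in self.nodes]
        if all(results):
            # Committed on both nodes, so acknowledging cannot lose data.
            return True
        if any(results):
            # One node is down: the survivor keeps serving, and the write
            # is remembered so the partner can be resynced later.
            self.pending_resync[key] = value
            return True
        return False  # no node committed the write; do not acknowledge

    def resync(self) -> bool:
        # Only replay the backlog once both nodes are back online.
        if not all(n.online for n in self.nodes):
            return False
        for node in self.nodes:
            node.blocks.update(self.pending_resync)
        self.pending_resync.clear()
        return True


# Usage: node2 misses a write while offline, then catches up on resync.
node1, node2 = Node("node1"), Node("node2")
vol = MirroredVolume(node1, node2)
vol.write("a", b"1")
node2.online = False
vol.write("b", b"2")
node2.online = True
vol.resync()
```

The key design point is that the fast path acknowledges only after both commits succeed; the degraded path trades that guarantee for continued service and relies on the resync to restore the mirror.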

Mediator Instance

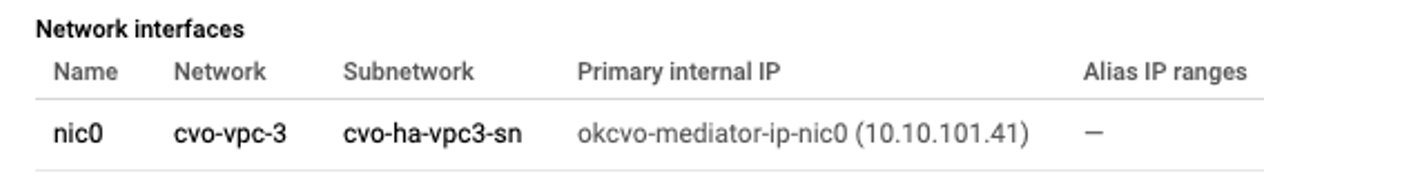

An f1-micro instance acts as the mediator to enable communication between the ONTAP nodes. It uses two 10 GB standard persistent disks. The mediator plays a key role in enabling smooth storage handover and giveback processes when failures occur. It also controls the cluster mailbox disks, which store detailed information about the cluster state that is key to ensuring consistency during failover.
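One way to picture the mediator’s role is as a tiebreaker. The following is a minimal sketch, assuming a simple rule in which a node takes over its partner’s storage only when it has lost direct contact with the partner and the mediator confirms that it cannot see the partner either. The function and parameter names are hypothetical, and Cloud Volumes ONTAP’s actual takeover logic, including its use of the mailbox disks, is more involved than this.

```python
def should_take_over(partner_reachable: bool,
                     mediator_reachable: bool,
                     mediator_sees_partner: bool) -> bool:
    """Toy tiebreaker rule evaluated by one node (hypothetical logic).

    partner_reachable:     can this node reach its partner directly?
    mediator_reachable:    can this node reach the mediator?
    mediator_sees_partner: does the mediator still see the partner?
    """
    if partner_reachable:
        return False  # normal operation, nothing to take over
    if not mediator_reachable:
        # Cut off from both partner and mediator: this node cannot tell
        # whether the partner failed or it is isolated itself.
        return False
    # The mediator breaks the tie: take over only if it also lost the partner.
    return not mediator_sees_partner
```

Without a third party, two nodes that simply lose contact with each other could both decide to serve the same data; consulting the mediator is what lets one of them safely proceed.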

Zone Selection

Cloud Volumes ONTAP can be deployed in a single GCP zone or across multiple zones. In single-zone deployments, the architecture eliminates single points of failure within the zone by using a spread placement approach. This places the two data-serving VMs, each with its own backing storage, in separate failure domains, which protects against VM-level and persistent-disk-level failures. Keeping both nodes in one zone also reduces write latency between the ONTAP nodes to a degree, which can benefit extremely latency-sensitive workloads.

A multi-zone deployment is spread across three zones, with the two ONTAP nodes and the mediator instance each deployed in a different zone. It has a minimal write performance tradeoff compared to single-zone deployments, but it is the recommended architecture because it protects against zonal, VM, and persistent disk failures.
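The difference between the two layouts can be checked mechanically. This is a minimal Python sketch, assuming a topology is just a mapping from component name to zone and that data stays available as long as at least one ONTAP node survives. The zone names are hypothetical examples, and the check deliberately ignores VM- and disk-level failures, which spread placement covers in the single-zone case.

```python
# Hypothetical placements; any three zones in one region would do.
SINGLE_ZONE = {"node1": "us-east4-a", "node2": "us-east4-a",
               "mediator": "us-east4-a"}
MULTI_ZONE = {"node1": "us-east4-a", "node2": "us-east4-b",
              "mediator": "us-east4-c"}


def surviving_nodes(topology: dict, failed_zone: str) -> list:
    """ONTAP nodes still running after a whole zone goes down."""
    return [name for name, zone in topology.items()
            if name.startswith("node") and zone != failed_zone]


def survives_any_single_zone_failure(topology: dict) -> bool:
    # Try every zone used by the deployment as the one that fails.
    return all(surviving_nodes(topology, zone)
               for zone in set(topology.values()))
```

With the multi-zone placement, losing `us-east4-a` still leaves `node2` serving data; with the single-zone placement, one zonal outage takes down both nodes.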

Network Configuration

VPC: An HA deployment of Cloud Volumes ONTAP requires four VPCs, with a private subnet in each VPC. The subnets in the four VPCs should be provisioned with non-overlapping CIDR ranges. The four VPCs are used for the following purposes (as shown in the conceptual diagram above):

- VPC 0 enables inbound communication for data access and to the Cloud Volumes ONTAP nodes

- VPC 1 provides cluster connectivity between the Cloud Volumes ONTAP nodes

- VPC 2 allows for non-volatile RAM (NVRAM) replication between the nodes

- VPC 3 is used for connectivity to the HA mediator instance and for disk replication traffic during node rebuilds

VPCs 1, 2, and 3 should all be non-routable, private networks, but can alternatively be shared networks. VPC 0 is most commonly a shared network as this network is used for client data and management access.
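Before deploying, it is worth confirming that the four subnets really do not overlap. Here is a minimal sketch using Python’s standard `ipaddress` module; the CIDR values are hypothetical placeholders, not recommended ranges.

```python
import ipaddress
from itertools import combinations

# Hypothetical subnet ranges for VPC 0 through VPC 3.
SUBNETS = {
    "vpc0": "10.0.0.0/24",
    "vpc1": "10.0.1.0/24",
    "vpc2": "10.0.2.0/24",
    "vpc3": "10.0.3.0/24",
}


def overlapping_pairs(subnets: dict) -> list:
    """Return every pair of VPC subnets whose CIDR ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    return [(a, b) for a, b in combinations(sorted(nets), 2)
            if nets[a].overlaps(nets[b])]
```

`overlapping_pairs(SUBNETS)` returns an empty list, while swapping VPC 3 to `10.0.0.128/25` would flag the pair `("vpc0", "vpc3")`.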

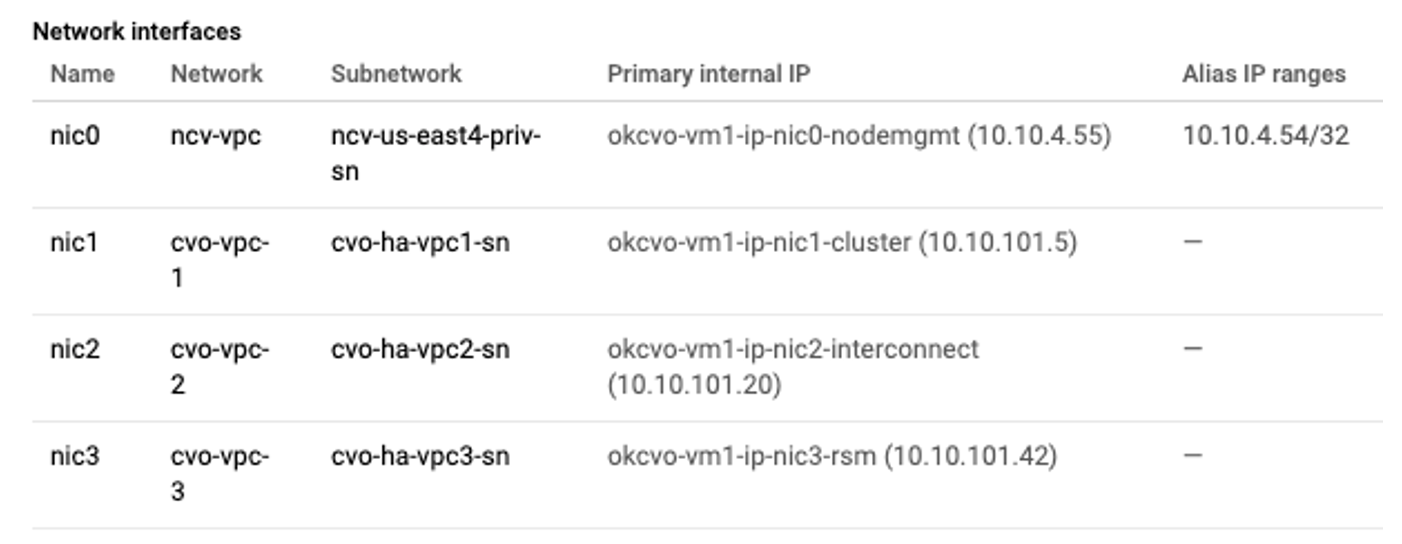

Let’s take a closer look at some ONTAP node network configuration options. Sample NIC configurations for the ONTAP nodes and the mediator instance are shown below:

NIC configuration for ONTAP node 1 (GCE instance)

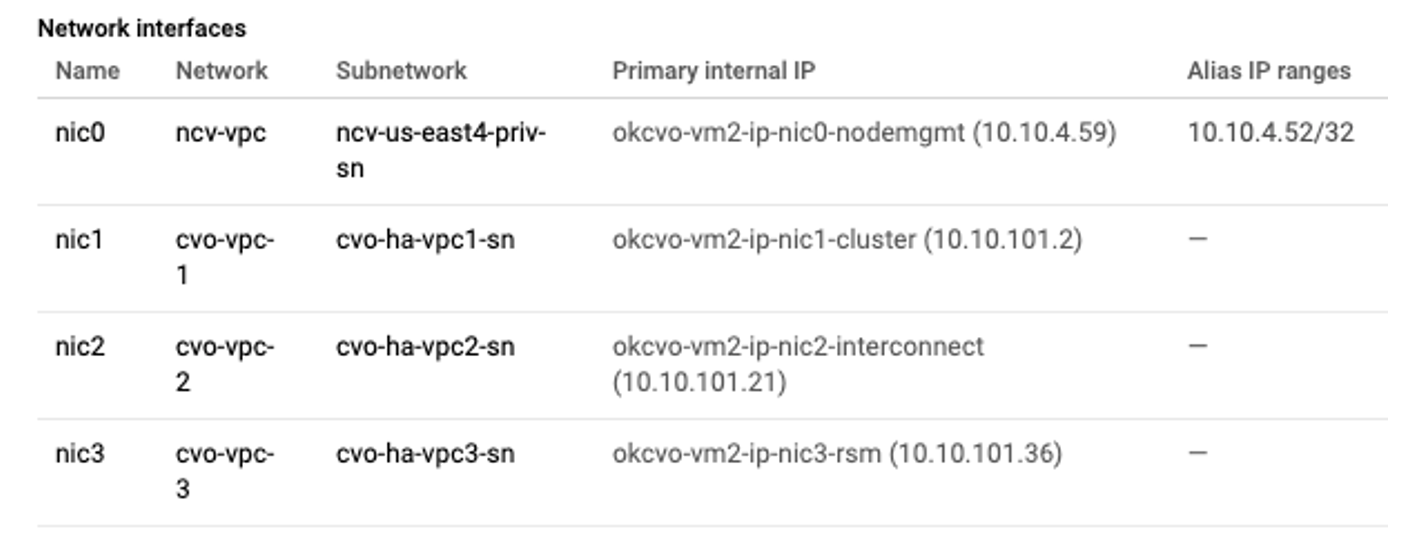

NIC configuration for ONTAP node 2 (GCE instance)

NIC configuration for mediator (GCE instance)

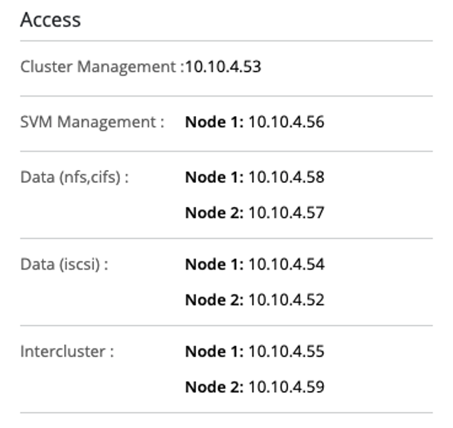

And here is a sample view of the configuration from Cloud Manager:

Data access information from Cloud Manager

And this is a sample view of the default network configuration from the ONTAP CLI:

Private Google Access: Two key Cloud Volumes ONTAP technologies, data tiering and Cloud Backup, support using Private Google Access to connect to Google Cloud Storage (GCS) without leaving the Google network.

Cloud Volumes ONTAP can reduce total cost of ownership (TCO) in many ways, but one of the simplest is to take less frequently used data and tier it from more expensive persistent disks to lower-cost GCS. Cloud Volumes ONTAP does this seamlessly with data tiering. Cloud Volumes ONTAP can also back up all data on the system using NetApp Cloud Backup Service, which stores full volume copies in GCS, protecting your data even further while maintaining all the ONTAP storage efficiencies.
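The core idea behind data tiering, deciding which data is cold enough to move to GCS, can be sketched as a simple age test. This is a minimal illustration, assuming each block records its last access time and that anything untouched for a fixed cooling period becomes a tiering candidate. `Block`, `split_hot_cold`, and the 31-day default are assumptions for this sketch; ONTAP’s actual tiering policies and cooling periods are configurable and work differently under the hood.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Block:
    """Hypothetical record of one data block and when it was last read."""
    block_id: int
    last_access: datetime


def split_hot_cold(blocks, now: datetime, cooling_days: int = 31):
    """Partition blocks into (hot, cold) by time since last access.

    Hot blocks stay on persistent disk; cold blocks are candidates for
    tiering to object storage. cooling_days is an assumed threshold.
    """
    cutoff = now - timedelta(days=cooling_days)
    hot = [b for b in blocks if b.last_access >= cutoff]
    cold = [b for b in blocks if b.last_access < cutoff]
    return hot, cold


# Usage: a block read two days ago stays hot; one idle for 90 days is cold.
now = datetime(2021, 11, 29)
blocks = [Block(1, now - timedelta(days=2)), Block(2, now - timedelta(days=90))]
hot, cold = split_hot_cold(blocks, now)
```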

To make use of these services, Private Google Access should be enabled on the subnet in VPC-0, the Cloud Volumes ONTAP data-serving network.

Traffic flow: Each ONTAP node uses nic0 as a generic target for NAS and management (MGMT) traffic. Users, clients, and applications connect through Google Cloud load balancers with frontend IP addresses (for NAS and MGMT). These frontends point to the primary IP of nic0 on one ONTAP node as the active path, with a second path to the other node for failover. Traffic for Cloud Volumes ONTAP features such as SnapMirror®, Cloud Backup, and iSCSI access also flows through nic0, but through an alias IP address. These types of access do not use the load balancer because failover is handled by the client, for example through iSCSI multipathing.
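The split between load-balanced and direct traffic can be summarized as a small routing table. This is a minimal Python sketch, assuming one frontend per traffic type and one nic0 alias IP per node; every address and name below is hypothetical, and the single NAS frontend is a simplification.

```python
# Hypothetical addresses, for illustration only.
LB_FRONTENDS = {"nas": "10.0.0.100", "mgmt": "10.0.0.101"}
NIC0_ALIAS_IPS = {"node1": "10.0.0.11", "node2": "10.0.0.12"}

LB_TRAFFIC = {"nas", "mgmt"}
DIRECT_TRAFFIC = {"snapmirror", "cloud_backup", "iscsi"}


def target_for(traffic: str, node: str = "node1") -> str:
    """Address a client would connect to for a given traffic type."""
    if traffic in LB_TRAFFIC:
        # The load balancer frontend hides which node is currently active.
        return LB_FRONTENDS[traffic]
    if traffic in DIRECT_TRAFFIC:
        # Bypasses the load balancer; the client is responsible for failover.
        return NIC0_ALIAS_IPS[node]
    raise ValueError(f"unknown traffic type: {traffic}")


def iscsi_path(preferred_nodes: list, healthy: set) -> str:
    """Client-side multipath: use the first path whose node is healthy."""
    for node in preferred_nodes:
        if node in healthy:
            return NIC0_ALIAS_IPS[node]
    raise RuntimeError("no healthy iSCSI path")
```

For example, `iscsi_path(["node1", "node2"], {"node2"})` falls back to node2’s alias IP, which is the kind of decision an iSCSI multipath client makes on its own without involving the load balancer.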

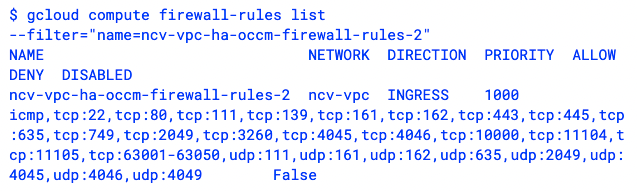

Firewall configuration: The Cloud Volumes ONTAP HA configuration requires inbound and outbound firewall rules on VPC-0. In addition to the networks that need access to Cloud Volumes ONTAP, you should also allow the IP ranges 35.191.0.0/16 and 130.211.0.0/22, which are associated with the Google internal load balancers used in the architecture. These ranges are used for the load balancer health checks.

Inbound rules should be created to allow ICMP, HTTP(S), and SSH for cluster management. Additional TCP ports should be opened for NFS, SMB/CIFS, iSCSI, SnapMirror data transfer, and so on (see the sample configuration below for the full set of ports). The firewall that Cloud Manager generates for Cloud Volumes ONTAP allows all outbound TCP, UDP, and ICMP traffic from the instances. Customers also have the option to fine-tune the outbound rules to allow only the required traffic.
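A quick way to sanity-check a VPC-0 ingress rule set is to model it and test a few source/port pairs against it. This is a minimal Python sketch, assuming each rule is a source CIDR plus a set of allowed TCP ports. The client range and rule list are hypothetical; the health-check ranges come from the text above, the ports 63001 to 63003 come from the load balancer section of this post, and NFS (2049), SMB (445), and iSCSI (3260) are the protocols’ well-known ports, not the full Cloud Volumes ONTAP port list.

```python
import ipaddress

# Google Cloud load balancer health-check source ranges.
HEALTH_CHECK_RANGES = ["35.191.0.0/16", "130.211.0.0/22"]

# Well-known TCP ports for some of the data protocols (not a full list).
DATA_PORTS = {"nfs": 2049, "smb": 445, "iscsi": 3260}

# Hypothetical VPC-0 ingress rules: (source CIDR, allowed TCP ports).
INGRESS_RULES = [
    ("10.10.0.0/16", {22, 80, 443, 445, 2049, 3260}),  # client network
    ("35.191.0.0/16", {63001, 63002, 63003}),
    ("130.211.0.0/22", {63001, 63002, 63003}),
]


def allowed(src_ip: str, port: int, rules=INGRESS_RULES) -> bool:
    """True if some rule admits TCP traffic from src_ip to port."""
    addr = ipaddress.ip_address(src_ip)
    return any(addr in ipaddress.ip_network(cidr) and port in ports
               for cidr, ports in rules)


def missing_health_check_ranges(rules=INGRESS_RULES) -> list:
    """Health-check ranges that no rule lists as a source."""
    configured = {cidr for cidr, _ in rules}
    return [r for r in HEALTH_CHECK_RANGES if r not in configured]
```

Forgetting one of the health-check ranges is an easy mistake to make, and it would leave the load balancers unable to probe the nodes, so `missing_health_check_ranges` is the check worth running first.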

Cloud Volumes ONTAP creates predefined firewall rules that allow ingress communication over all protocols and ports for VPC-1, VPC-2, and VPC-3. This communication is required to enable high availability between the ONTAP nodes.

Here is a sample of the firewall rules applied to ONTAP nodes 1 and 2. Note that VPC-0 is represented by ncv-vpc:

Cloud load balancers: As shown in the conceptual architecture, the Cloud Volumes ONTAP HA configuration includes four internal TCP/UDP load balancers that Cloud Manager creates during the initial deployment. These load balancers are used for cluster management, storage VM (SVM) management, and NAS traffic to each of the two ONTAP nodes in active/active configurations, where both nodes serve data concurrently.

Each of these load balancers is configured with a private IP address shared between its TCP and UDP backends, with forwarding rules and health checks configured on ports 63001, 63002, and 63003. Each ONTAP node is placed in its own GCP instance group, which allows the load balancer to designate the primary node for traffic and keep the failover node on standby. If the health check gets no response within the specified time, traffic is forwarded to the secondary node and a failover is initiated.
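The health-check-driven failover described above can be modeled in a few lines. This is a minimal Python sketch, assuming a load balancer that probes only its active backend and switches to the failover backend after a fixed number of consecutive failed probes. `FailoverLB`, `Backend`, and `unhealthy_threshold` are hypothetical names; real Google Cloud health checks have their own interval, timeout, and threshold settings, and failback is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """Hypothetical backend: one ONTAP node's instance group."""
    name: str
    healthy: bool = True


class FailoverLB:
    """Toy internal load balancer with a primary and a failover backend."""

    def __init__(self, primary: Backend, failover: Backend,
                 unhealthy_threshold: int = 3):
        self.primary = primary
        self.failover = failover
        self.active = primary
        self.threshold = unhealthy_threshold  # consecutive failures allowed
        self.failures = 0

    def probe(self) -> None:
        # Stand-in for one health check against the active backend,
        # e.g. on one of the ports 63001-63003.
        if self.active.healthy:
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.threshold and self.active is self.primary:
            # Too many missed probes: send traffic to the standby node.
            self.active = self.failover
            self.failures = 0

    def route(self) -> str:
        """Name of the backend that currently receives traffic."""
        return self.active.name


# Usage: node1 stops answering health checks and traffic moves to node2.
lb = FailoverLB(Backend("node1"), Backend("node2"))
lb.primary.healthy = False
for _ in range(3):
    lb.probe()
```

Requiring several consecutive failures before switching is a deliberate tradeoff: it avoids failing over on a single dropped probe at the cost of a slightly longer detection time.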

Global access, if enabled on a load balancer, allows VMs deployed in any region to access the services behind internal TCP/UDP load balancers. In a Cloud Volumes ONTAP deployment, cross-region mounts of file shares could result in higher latency, so global access is disabled by default. However, if there is a business requirement for that functionality, customers can enable global access.

Conclusion

In addition to high availability, Cloud Volumes ONTAP also offers a host of proprietary NetApp features such as storage efficiency, SnapMirror® replication for DR, malware protection through cloud-based WORM storage, quick clone provisioning through FlexClone®, data protection with NetApp Snapshot™ copies, and much more. It also provides a unified management interface through Cloud Manager that addresses data visibility and automation challenges, especially in hybrid and multicloud deployments.

Cloud Volumes ONTAP uses native Google Cloud components to build a robust multi-zone HA architecture that can protect your workloads from zone-level failures. It is the same HA configuration NetApp customers have trusted for many years for their on-premises workloads, and it is available in AWS and Azure as well as Google Cloud. Cloud Volumes ONTAP thus provides a simple, easy-to-use solution for storage high availability in Google Cloud.
