August 28, 2018

Topics: Database

4 minute read

Are you thinking about how to increase the performance of your data in the cloud? Consider NetApp® Cloud Volumes Service, which is about more than just NFS or SMB. With Cloud Volumes Service, you can be confident that your data is durable, encrypted, highly available, and high-performing.

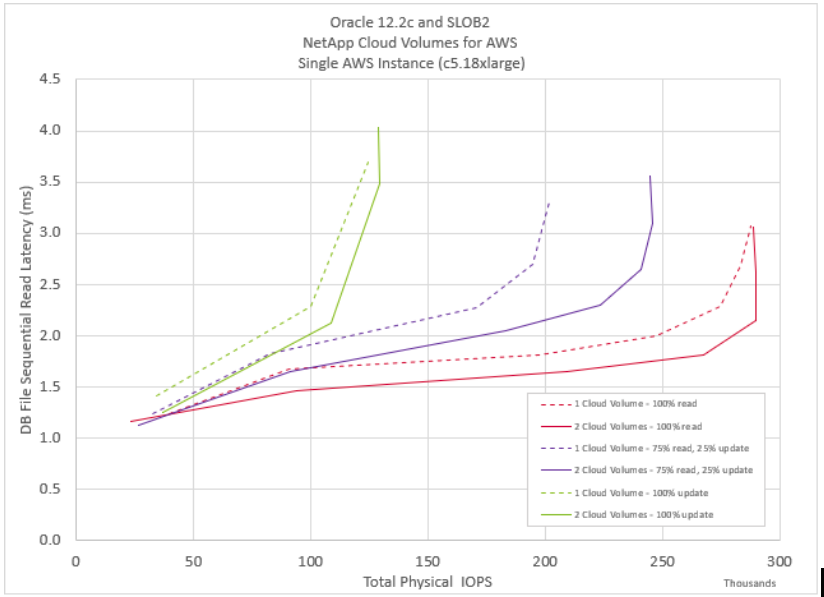

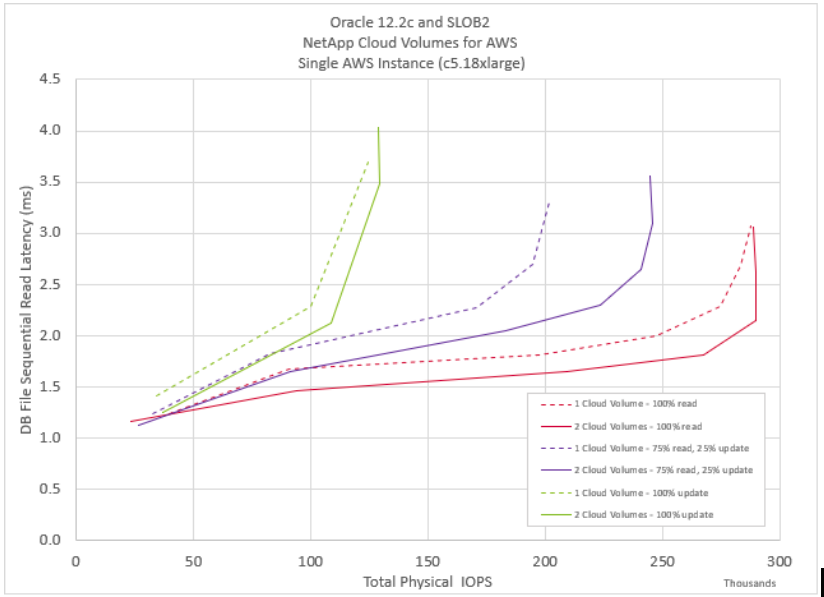

For details about how Cloud Volumes Service delivers data durability, strong encryption, and high availability, read our first blog post in this series. That post also gives you some compelling specifics about NetApp Cloud Volumes Service Snapshot™ technology.

Here, in our third blog post, we focus on Oracle performance. Oracle is especially well suited to deployment with NetApp Cloud Volumes Service. With Cloud Volumes Service, your data is protected by eight nines of durability and is accessible across all the Amazon Web Services (AWS) Availability Zones within a given region. And Oracle Direct NFS Client seems almost purpose-built for the AWS architecture. We say more about that aspect later in this post.

Proven Performance

When you evaluate database workloads, always consider the impact of server memory. Reading from memory is orders of magnitude faster than reading from any disk, so ideally queries find their data already resident in memory. What does this mean for you? Storage latency matters, but the memory hit percentage matters more. When your database administrators say that they need a certain latency level, keep that level in mind: Cloud Volumes Service can help you meet or even exceed it.
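To make the hit-percentage point concrete, here is a minimal sketch that models average read latency as a weighted mix of memory and storage latency. The function name and every latency figure are illustrative assumptions, not measurements from our tests.

```python
def effective_latency_us(hit_ratio, memory_latency_us, storage_latency_us):
    """Average read latency when `hit_ratio` of reads are served from memory."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * memory_latency_us + (1.0 - hit_ratio) * storage_latency_us


# Illustrative numbers only (assumptions): 0.1 us for memory, 500-1000 us for storage.
MEMORY_US = 0.1
for hit_ratio, storage_us in [(0.90, 1000.0), (0.90, 500.0), (0.99, 1000.0)]:
    avg = effective_latency_us(hit_ratio, MEMORY_US, storage_us)
    print(f"hit ratio {hit_ratio:.0%}, storage {storage_us:.0f} us -> average {avg:.2f} us")
```

Under these assumed numbers, halving storage latency at a 90% hit ratio brings the average from roughly 100 µs to roughly 50 µs, while raising the hit ratio to 99% with the slower storage brings it to roughly 10 µs.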

To back up our claim, we put NetApp Cloud Volumes Service and Oracle to the test.

We started with the knowledge that to meet load demands, Oracle Direct NFS Client spawns many network sessions to each NetApp cloud volume. The vast number of network sessions creates the potential for a significant amount of throughput for your database.

To test Oracle’s ability to generate load, we ran a series of proofs of concept across various Amazon Elastic Compute Cloud (Amazon EC2) instance types, with various workload mixtures and volume counts. The SLOB 2 workload generator drove the workload against a single Oracle 12c Release 2 (12.2) database.

Testing showed that no particular instance size is required; you can choose an instance size according to your needs. Oracle and NetApp Cloud Volumes Service can consume the resources of even the largest instance. To illustrate this point, the following graph focuses on the c5.18xlarge instance type.

AWS imposes an architectural egress limit of 5 Gbps for all I/O that leaves the Virtual Private Cloud (VPC). This limit defines the lower threshold in the graph, as you can see in the 100% update workload, which is represented by the green lines. Because each update is a read/modify/write operation, this workload amounts to 50% read and 50% write, with the writes being the limiting factor. Although the two-volume test resulted in slightly lower application latency compared with the one-volume test, both volume counts converged at the same point: the VPC egress limit. This convergence worked out to be approximately 130,000 Oracle IOPS.
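As a rough sanity check on the 100% update result, the sketch below estimates how much of the 5 Gbps egress limit the data-block writes alone would consume. The 8 KiB block size is an assumption (Oracle's default `db_block_size`); this post does not state the block size that was tested, and the function name is ours.

```python
def write_bandwidth_gbps(total_iops, write_fraction, block_size_bytes):
    """Network bandwidth (Gbit/s) consumed by data-block writes alone."""
    write_iops = total_iops * write_fraction
    return write_iops * block_size_bytes * 8 / 1e9


# Assumption: 8 KiB blocks (Oracle's default db_block_size); not stated in the post.
BLOCK_SIZE_BYTES = 8192
gbps = write_bandwidth_gbps(130_000, 0.5, BLOCK_SIZE_BYTES)
print(f"data-block writes: {gbps:.2f} Gbps of the 5 Gbps egress limit")
```

Under that assumption, the block writes come to about 4.26 Gbps. Redo log writes and NFS/TCP protocol overhead are not modeled here and would add to that figure, which is consistent with the workload converging at the egress limit.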

The 100% read workload represents the upper limit for the Oracle database that was running against the NetApp Cloud Volumes Service in AWS. The pure read workload scenario, as represented by the red lines, is limited only by the instance type and by the amount of network and CPU resources that are available to the instance. Just as for the 100% update workload that we discussed, although the two-volume configuration resulted in lower application latency, the tested configurations converged at the instance resource limit. In this case, that convergence was just shy of 300,000 Oracle IOPS.

The third workload, represented by purple lines, is a mix of 75% Oracle reads and 25% Oracle updates (read/modify/write operations). This scenario performed exceptionally well with either one or two volumes, though the two-volume configuration outperformed the one-volume configuration. Many database administrators prefer the simplicity of a single-volume database, and 200,000 IOPS should satisfy all but the most demanding database needs while keeping that simple layout. For the most demanding workloads, additional volumes enable greater throughput, as shown in the graph.

Best Practices

The following are best practices for when you use Oracle and NetApp Cloud Volumes Service:

- init.ora file

- db_file_multiblock_read_count

- Remove this option if it's present

- Redo block size as either 512 or 4KB

- Leave as default 512, unless recommended otherwise by App or Oracle

- If redo rates are greater than 50MBps, consider testing a 4KB block size

- Network considerations

- Disable flow control (relevant only to on-premises environments).

- Jumbo frames for 10GbE (relevant only to on-premises environments).

- Enable TCP timestamps, selective acknowledgment (SACK), and TCP window scaling on hosts.

- Slot tables:

- sunrpc.tcp_slot_table_entries = 128

- sunrpc.tcp_max_slot_table_entries = 65536

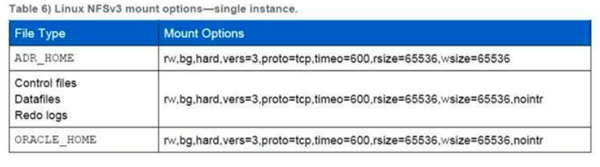

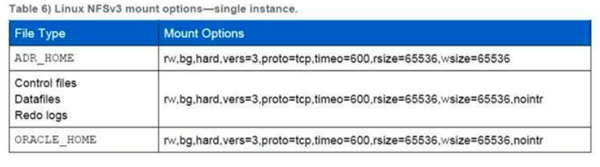

- You can find mount options that differ from the suggested Cloud Volumes Service options on the NetApp Cloud Central Cloud Volumes Service UI. The following table shows Direct NFS mount options for a single instance.
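The host-side network and slot table settings from the list above can be checked with a short script. The following is a minimal sketch, assuming a Linux host where these sysctl keys are exposed under `/proc/sys` (the `sunrpc` entries appear only once the sunrpc kernel module is loaded). The `check_settings` helper is hypothetical, and it treats a value of `1` as "enabled" for the three TCP options.

```python
from pathlib import Path

# Recommended values from the best practices list. The /proc/sys paths are the
# usual Linux locations for these sysctl keys (assumption: a Linux host).
RECOMMENDED = {
    "sunrpc/tcp_slot_table_entries": 128,
    "sunrpc/tcp_max_slot_table_entries": 65536,
    "net/ipv4/tcp_timestamps": 1,
    "net/ipv4/tcp_sack": 1,
    "net/ipv4/tcp_window_scaling": 1,
}


def check_settings(root="/proc/sys", recommended=RECOMMENDED):
    """Return {key: (current, wanted)} for each setting that is missing or differs."""
    mismatches = {}
    for key, wanted in recommended.items():
        path = Path(root) / key
        try:
            current = int(path.read_text().split()[0])
        except (OSError, ValueError, IndexError):
            current = None  # file missing, unreadable, or not an integer
        if current != wanted:
            mismatches[key] = (current, wanted)
    return mismatches


if __name__ == "__main__":
    for key, (current, wanted) in check_settings().items():
        print(f"{key.replace('/', '.')}: current={current}, recommended={wanted}")
```

The script only reads values and reports differences; it changes nothing. Taking the root directory as a parameter keeps the check testable against a temporary directory instead of the live `/proc/sys`.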

Boost Your Oracle Database Performance Today

Start getting the high performance and durability that you need from your Oracle database, and make it easy by deploying NetApp Cloud Volumes Service. To learn more, view our on-demand webcast, How to Optimize Your Oracle Database Utilization with AWS and NetApp Cloud Volumes. Then get started by signing up for NetApp Cloud Volumes Service for AWS.

About NetApp

NetApp is the data authority for hybrid cloud. We provide a full range of hybrid cloud data services that simplify management of data across cloud and on-premises environments to accelerate digital transformation. Together with our partners, we empower global organizations to unleash the full potential of their data to expand customer touchpoints, foster greater innovation and optimize operations. For more information, visit https://bluexp.netapp.com.