September 5, 2017

Topics: Cloud Volumes ONTAP, AWS, Performance (6 minute read)

Remember the old days, before the cloud was a reality?

Back then you would buy a storage system which included a package of appropriately-sized and tested hardware and its corresponding storage software. Your storage performance was guaranteed out-of-the-box, literally.

Actually, this is the way NetApp started, building easy-to-use, purpose-built storage appliances, which were something like toasters. That’s why we called the company Network Appliance.

Today, NetApp not only sells these FAS storage systems, but has also extended the best storage OS in the industry into a software-defined storage management platform for AWS, Google Cloud, and Azure: Cloud Volumes ONTAP.

This offers great flexibility, easy consumption, a jump start into the cloud, and the ability to run more workloads in the cloud that rely on traditional storage capabilities.

Sounds good so far, right? Almost.

Unlike the engineered systems (FAS)—where very smart storage experts picked a good mix of CPU power, network bandwidth, memory, and storage capacity and bundled it in small, medium, and large sizes to satisfy most workloads—now you are the person who has to size the underlying infrastructure.

How can you find the right sizing to ensure you get the best storage performance? Let me give you a helping hand. This post will walk you through all of the basics of performance sizing for Cloud Volumes ONTAP.

Capacity Sizing

This is the easy part. The cloud makes capacity sizing much easier than it is with FAS. Simply provision enough capacity to fulfill your requirements.

The cool “cost sizer” for AWS or Azure (a tool I use every day) does a good job of deciding which license types handle the required capacity, taking storage efficiency into consideration.

At a high level, that’s all you have to do to take care of capacity sizing. Now let’s move on to the tricky part: performance sizing.

Storage Performance Sizing

Storage performance depends heavily on sizing the underlying infrastructure appropriately. This is the hard part. It starts with the fact that most people think storage performance equals throughput: start a “dd” and measure Mbps.

If your workload is about writing gigabytes of data in large, sequential chunks (256KB and bigger), this is a reasonable test. You might want to run multiple “dd” jobs from multiple machines to eliminate single-client bottlenecks.

Most of the time, though, you run much more complex workloads: a mix of reads and writes with different block sizes (mostly between 4KB and 64KB). A database has a completely different workload mix compared to file sharing, and SAP HANA has a very different mix than a typical Oracle database. However, the storage performance metrics are the same.

These metrics are IOPS (Input/Output operations per second), latency, block size, and concurrency.

A quick reminder:

- Throughput is IOPS x block size

- IOPS is 1/latency x concurrency

- Block size and concurrency are determined by the application

- Latency is a result of raw infrastructure latency and caching
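To make the two formulas concrete, here is a minimal Python sketch. The function names and the sample numbers (1 ms latency, 8 outstanding IOs, 8 KiB blocks) are illustrative assumptions of mine, not measurements.

```python
def iops_from_latency(latency_s: float, concurrency: int) -> float:
    """IOPS = (1 / latency) x concurrency."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return concurrency / latency_s


def throughput_bytes_per_s(iops: float, block_size_bytes: int) -> float:
    """Throughput = IOPS x block size."""
    return iops * block_size_bytes


if __name__ == "__main__":
    # Assumed workload: 1 ms per IO, 8 IOs in flight, 8 KiB blocks.
    iops = iops_from_latency(latency_s=0.001, concurrency=8)
    mib_per_s = throughput_bytes_per_s(iops, 8 * 1024) / (1024 * 1024)
    print(f"{iops:.0f} IOPS, {mib_per_s:.1f} MiB/s")
```

Note how the same 1 ms latency gives very different IOPS depending on how many IOs the application keeps in flight, which is why concurrency belongs in every sizing discussion.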

I want to give you some guidelines for picking the right virtual components to achieve the desired outcome.

In contrast to our FAS controllers, we don’t have precise numbers on how different cloud instance types perform. Remember? You chose SDS to pick the hardware yourself…

Let’s approach it from a conceptual data flow perspective and look at the performance impacting parameters.

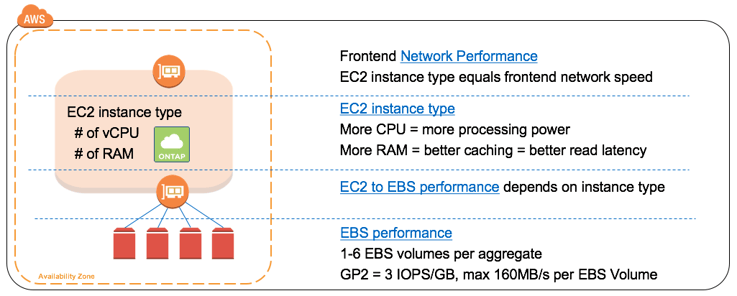

To make it easier, I will use AWS terminology. The names on Azure are different, the concepts are the same. Below you’ll see a conceptual picture of Cloud Volumes ONTAP on AWS:

An IO request (NFS/SMB/iSCSI) will arrive at the front-end NIC. Cloud Volumes ONTAP running in the Amazon EC2 instance uses the CPU to do its storage thing and spare memory to cache reads from the backend Amazon EBS disks.

For reads, Cloud Volumes ONTAP needs to fetch the relevant data either from the backend Amazon EBS disks (cache miss) or from memory (cache hit). Writes are always persisted to backend Amazon EBS first, before the write is acknowledged to the client.

Each of these layers has potential bottlenecks you need to take into consideration when choosing the right components. Fortunately, these limits are (well) documented in the AWS documentation:

- EC2 instance types: number of CPUs, memory, front-end network performance

- EC2 to EBS Performance: throughput, max IOPS

- EBS Performance: IOPS/GB, Mbps/GB, limits per volume and instance

Again, it makes sense to look at throughput and IOPS.

Throughput

For larger block sizes, the limiting factor is mostly the front-end network of the EC2 instance. Depending on the instance type, AWS specifies the network throughput as “moderate,” “high,” or “(up to) 10 gigabit.”

The real-life throughput is not specified; you need to test it for your environment. My test showed around 160Mbps for “high.” If you need more, you need to pick a bigger instance type.

Also consider the backend throughput of the Amazon EC2 instance to Amazon EBS. You need enough backend bandwidth.

IOPS

IOPS are limited by the capability of the application to generate IOPS and by the backend to deliver these IOPS.

For a single workload, concurrency is defined by the application and cannot be changed. This means latency becomes the key factor which defines achievable IOPS. For read workloads, latency can be improved by caching, which means more RAM for the Amazon EC2 instance.
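The effect of caching on read latency can be sketched with a simple weighted average. This is a rough model under my own assumptions: the hit latency of 0.1 ms is a placeholder, the 1 ms miss latency is borrowed from the EBS figure used elsewhere in this post, and real latency distributions are not this tidy.

```python
def effective_read_latency_s(hit_ratio: float,
                             hit_latency_s: float,
                             miss_latency_s: float) -> float:
    """Average read latency for a given cache hit ratio (0.0 to 1.0)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_latency_s + (1.0 - hit_ratio) * miss_latency_s


def read_iops(hit_ratio: float, hit_latency_s: float,
              miss_latency_s: float, concurrency: int) -> float:
    """Achievable read IOPS = concurrency / average latency."""
    return concurrency / effective_read_latency_s(
        hit_ratio, hit_latency_s, miss_latency_s)


if __name__ == "__main__":
    # Assumed latencies: 0.1 ms from memory, 1 ms from the backend.
    for ratio in (0.0, 0.5, 0.9):
        iops = read_iops(ratio, 0.0001, 0.001, concurrency=1)
        print(f"hit ratio {ratio:.0%}: about {iops:.0f} read IOPS")
```

With concurrency fixed by the application, a higher hit ratio is the only lever in this model, which is the argument for giving the instance more RAM.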

For writes, the latency is defined by the time the IO requires to travel to the instance, the processing time within the instance, and the time the instance needs to persist it to the backend storage.

In the absence of the battery-backed DRAM you would have on FAS, writes need to be persisted to Amazon EBS instead, which incurs around 1ms of latency. As an example, this limits the achievable IOPS for a single-threaded application (concurrency = 1) to ~1000 IOPS, since 1/1ms x 1 = 1000.

RAM and CPU are usually plentiful on the supported Amazon EC2 types and are sufficient to serve tens of thousands of IOPS. Besides the infrastructure latency, the number of IOPS you get from the backend EBS storage is the biggest limiting factor. This is the place you can easily screw up.

According to the Amazon EBS documentation, you get 3 IOPS/GB for GP2 (=SSD) storage.

The more capacity you provision, the more IOPS you can drive. At 1TB, this results in 3000 IOPS. Below 1TB, there is a mechanism that lets a volume burst above its baseline IOPS for a limited time, which is good for spiky workloads but distorts benchmark results.

Size the capacity so that you can achieve the required IOPS sustainably. Also choose an EC2 instance which is Amazon EBS-optimized, since it gives better performance to EBS.
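As a quick sanity check for this rule, the sketch below turns an IOPS requirement into a GP2 capacity. It uses only the 3 IOPS/GB baseline quoted above; the function names are mine, and burst credits and per-volume or per-instance limits are deliberately not modeled, so check the current AWS documentation for those.

```python
import math

GP2_BASELINE_IOPS_PER_GB = 3  # baseline figure quoted in this post


def baseline_iops(capacity_gb: int) -> int:
    """Sustained (non-burst) IOPS for a given provisioned capacity."""
    return capacity_gb * GP2_BASELINE_IOPS_PER_GB


def capacity_for_iops(required_iops: int) -> int:
    """Smallest capacity in GB whose baseline covers the required IOPS."""
    return math.ceil(required_iops / GP2_BASELINE_IOPS_PER_GB)


if __name__ == "__main__":
    print(baseline_iops(1000))       # 3000
    print(capacity_for_iops(10000))  # 3334
```

If the capacity you actually need is smaller than the result, you are sizing for IOPS rather than for space, and that is fine as long as you know it.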

Putting It All Together

For bandwidth (= large sequential IO) pick an Amazon EC2 instance type with enough front-end network performance. Choose an Amazon EBS-optimized instance and consider the available bandwidth to Amazon EBS. Add enough capacity and volumes to drive the bandwidth.

For IOPS (= small random IO) pick an Amazon EC2 instance with enough front-end network performance and memory (the more, the better).

Choose an Amazon EBS-optimized instance and consider the available bandwidth to Amazon EBS. Add enough Amazon EBS volumes and capacity to achieve IOPS.

Start with the requirement and size along the data path, eliminating bottlenecks along the way. Since virtual infrastructures are not as precisely-defined as a given hardware box, expect variations in the results you achieve. Therefore, plan for some headroom.
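Sizing along the data path boils down to finding the slowest stage and leaving some headroom. Here is a minimal sketch of that idea. The stage names, the 20% default headroom, and the sample limits are hypothetical placeholders; only the 160 figure echoes my own test result for “high” network performance.

```python
from typing import Dict, Tuple


def sustainable_limit(stage_limits: Dict[str, float],
                      headroom: float = 0.2) -> Tuple[str, float]:
    """Return the bottleneck stage and its limit after reserving headroom.

    stage_limits maps a stage name to its limit; all values must share
    one unit (either throughput or IOPS, not a mix).
    """
    if not stage_limits:
        raise ValueError("at least one stage is required")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    bottleneck = min(stage_limits, key=stage_limits.get)
    return bottleneck, stage_limits[bottleneck] * (1.0 - headroom)


if __name__ == "__main__":
    # Hypothetical limits for each stage, all in the same throughput unit.
    limits = {"front_end_network": 160.0,
              "ec2_to_ebs": 250.0,
              "ebs_volumes": 400.0}
    stage, usable = sustainable_limit(limits, headroom=0.25)
    print(f"bottleneck: {stage}, plan for about {usable:.0f}")
```

If the usable figure is below your requirement, upgrade the bottleneck stage and run the check again; the next-slowest stage then becomes the one to watch.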

Always do a proof-of-concept to test whether your expectations meet reality!

Want to get started? Try out Cloud Volumes ONTAP today with a 30-day free trial.