April 19, 2017

Topics: Cloud Sync, Cloud Storage, Data Migration | 6 minute read

Big Data analytics has become an essential service for enterprise customers. Analyzing huge amounts of data can surface otherwise hidden insights and help increase revenue.

In the past, this meant investing significant amounts of money in building data centers to make sufficient resources available for such operations.

Public cloud providers, on the other hand, address this challenge with managed services that offload these tasks from on-premises data centers to the cloud.

By using cloud services, enterprises can leverage the benefits of cloud elasticity and the pay-per-use model to lower their overall expenses and shift spending from CapEx to OpEx.

Meet: Elastic MapReduce (EMR).

Amazon EMR Services

Amazon EMR is a managed cloud service built on the Hadoop framework, intended to allow fast and cost-effective processing and analyzing of large data sets.

Its main purpose is to offload installation and operation management to the cloud provider, and to allow on-demand provisioning of virtually unlimited compute instances to complete the data analysis in a timely manner.

Using a cloud service such as EMR for Big Data analysis requires organizations to consider parameters such as security, performance, and data transfer to and from the cloud.

Existing methods for transferring data to the cloud often require deep technical knowledge and significant time to implement.

Meet NetApp Cloud Sync, which simplifies and expedites data transfer to AWS S3 so that services such as AWS EMR can be utilized quickly and efficiently.

Synchronizing Data to S3 with NetApp Cloud Sync

Cloud Sync is designed to address the challenges of synchronizing data to the cloud by providing a fast, secure, and reliable way for organizations to transfer data from any NFSv3 or CIFS file share to an Amazon S3 bucket.

After the required AWS services such as EMR have run, Cloud Sync can also be used to sync the data and the results back to the on-premises environment.

Cloud Sync works not only with NetApp storage, but with any NFSv3/CIFS share. It is responsible for the entire synchronization process: converting file-based NFS/CIFS data sets into the object format used by S3, creating the initial replication, incrementally updating changes on both sides, and returning the resulting data set.
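To make the file-to-object conversion more concrete, here is a minimal sketch of how a file path under an NFS export could be mapped to a flat S3 object key. It is an illustration only, not Cloud Sync's actual implementation; the function name `object_key`, the export path, and the `prefix` parameter are all assumptions made for this example.

```python
from pathlib import PurePosixPath


def object_key(export_root: str, file_path: str, prefix: str = "") -> str:
    """Map a file under an NFS export to an S3-style object key.

    Hypothetical helper: S3 has no real directories, so the path relative
    to the export root simply becomes part of the key.
    """
    relative = PurePosixPath(file_path).relative_to(PurePosixPath(export_root))
    key = relative.as_posix()
    clean_prefix = prefix.strip("/")
    return f"{clean_prefix}/{key}" if clean_prefix else key
```

With this mapping, a file such as `/exports/logs/2017/04/fw.log` under the export `/exports/logs` would be stored under the key `input/2017/04/fw.log` when the prefix is `input`.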

Cloud Sync offers an intuitive, web-based interface to manage the entire process of creating an initial transfer, scheduling incremental updates, and monitoring.

It takes a step-by-step approach and offers additional assistance in the form of links, chat support and documentation for all aspects of the process.

Cloud Sync offers a 14-day free trial, which can be accessed here.

NFS to S3 Data Sync with Cloud Sync: Example

Three components need to be defined and configured in order to use Cloud Sync to transfer data:

- The NFS server

- The Data Broker

- The S3 bucket

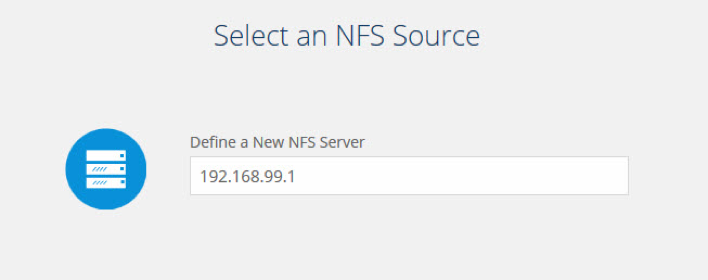

The first step when creating a synchronization from an NFS server to S3 is to configure the NFS server. You can specify either an IP address or a hostname that the Data Broker can resolve and access.

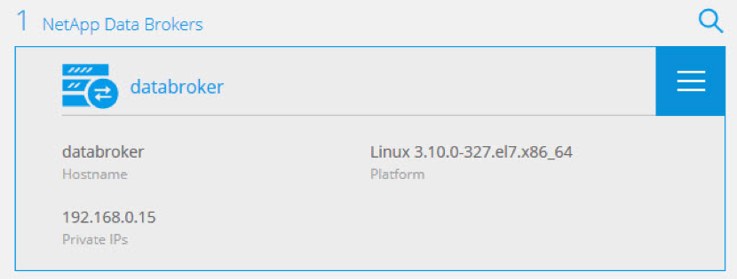

Data Broker is an application that is responsible for the actual data transfer between the NFS volume and the S3 bucket.

This component can be located in the company's data center or provisioned in the company's AWS VPC. It needs access to the NFS server in order to list NFS exports and read and write files on it (write permission is needed if results are to be synced back to the NFS server).

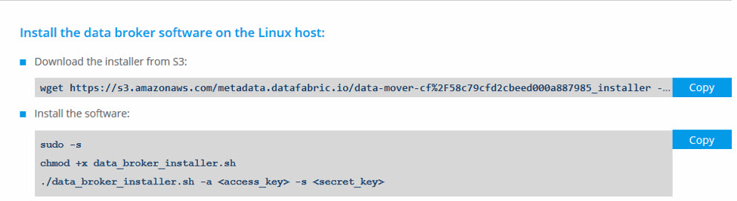

Data Broker for AWS is deployed using a template; the user only needs to select the network parameters (VPC and subnet). On-premises, Data Broker can be installed on a Red Hat Enterprise Linux 7 or CentOS 7 machine.

To successfully install and use Data Broker, the following AWS objects must be created:

- An AWS user with programmatic access (record its Access Key and Secret Access Key)

- An AWS policy, created either from a JSON file provided by NetApp or manually, and linked to the user account
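For readers creating the policy manually, the following sketch builds a plausible policy document in Python. It is not the JSON file provided by NetApp, whose exact contents may differ; the function name `build_bucket_policy` and the chosen set of S3 actions are assumptions made for illustration, covering bucket listing plus object read and write.

```python
import json


def build_bucket_policy(bucket: str) -> dict:
    """Return a hypothetical IAM policy granting sync access to one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Needed to show the list of available buckets.
                "Effect": "Allow",
                "Action": ["s3:ListAllMyBuckets"],
                "Resource": "*",
            },
            {
                # Bucket-level operations.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Object-level operations for syncing in both directions.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def policy_json(bucket: str) -> str:
    """Serialize the policy so it can be pasted into the AWS console."""
    return json.dumps(build_bucket_policy(bucket), indent=2)
```

Calling `policy_json("example-bucket")` yields a document that can be reviewed and adjusted before it is attached to the user account.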

Creation of an on-site Data Broker generates a personalized installer download link and requires an administrator to run the Installer on the local machine.

The Installer will install all prerequisites and start services. After the services are started, Cloud Sync will retrieve the data from Data Broker and display it as online.

The next step is to select the NFS share from the server that will be synced and specify in which bucket the data will be stored.

The list of available buckets is obtained using the Access Key and Secret Access Key specified during the Data Broker installation. Optionally, tags for replication may be created.

Performance

Fast data transfer is a major benefit of Cloud Sync.

This is accomplished through parallelization of work. Cloud Sync walks through the directory structure in parallel, transmits files in parallel, and converts from file-based storage to object storage in real time.
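The general idea of parallel directory walking and parallel file transmission can be sketched as follows. This is an illustrative approximation using a thread pool, not the Data Broker's actual code; the function names and the `send` callable (standing in for whatever uploads one file) are assumptions made for this example.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def list_dir(path: str) -> Tuple[List[str], List[str]]:
    """List one directory, returning (subdirectories, files)."""
    subdirs, files = [], []
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                subdirs.append(entry.path)
            elif entry.is_file(follow_symlinks=False):
                files.append(entry.path)
    return subdirs, files


def parallel_walk(root: str, workers: int = 8) -> List[str]:
    """Walk a tree level by level, listing each level's directories concurrently."""
    found: List[str] = []
    pending = [root]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while pending:
            results = pool.map(list_dir, pending)
            pending = []
            for subdirs, files in results:
                pending.extend(subdirs)
                found.extend(files)
    return found


def parallel_transfer(files: List[str], send: Callable[[str], object],
                      workers: int = 8) -> list:
    """Apply the hypothetical `send` callable to every file concurrently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(send, files))
```

Threads suit this kind of work because listing directories and sending files is dominated by waiting on network and storage I/O rather than CPU.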

NFS operations executed by the Data Broker are optimized and tuned for efficient access and transfer. Cloud Sync keeps the catalog of data, and subsequently transfers and syncs only changed data.
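A catalog-based incremental sync can be pictured with the sketch below. It assumes, purely for illustration, that a file counts as changed when its size or modification time differs from the previously recorded value; the way Cloud Sync actually tracks changes is not described here, and the names `scan` and `diff` are hypothetical.

```python
import os
from typing import Dict, List, Tuple

# Relative path -> (size in bytes, modification time in nanoseconds)
Catalog = Dict[str, Tuple[int, int]]


def scan(root: str) -> Catalog:
    """Record a size/mtime signature for every file under root."""
    catalog: Catalog = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            st = os.stat(full)
            catalog[os.path.relpath(full, root)] = (st.st_size, st.st_mtime_ns)
    return catalog


def diff(previous: Catalog, current: Catalog) -> Tuple[List[str], List[str]]:
    """Return (new or changed paths, deleted paths) between two catalogs."""
    changed = sorted(p for p, sig in current.items() if previous.get(p) != sig)
    deleted = sorted(p for p in previous if p not in current)
    return changed, deleted
```

Only the paths returned by `diff` would need to be transferred on the next run, which is what keeps incremental updates small compared with the initial copy.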

Administrators have complete control over the synchronization schedule and can run incremental transfers either automatically at specified intervals or manually when needed.

This means that transferring large data sets from on-premises NFS to S3 and back takes significantly less time than with other available methods.

Security

To better understand the security of the data transfer provided by Cloud Sync, it helps to look at its data flow. All I/O operations performed by the service are carried out by Data Broker.

The Cloud Sync service is only responsible for managing the process. Because the Data Broker runs either in the company's AWS VPC or on-premises, data stays within the company's security boundary at all times: all connections from AWS to the on-premises data center are created either through a secure VPN or by using AWS Direct Connect.

All communications between the Cloud Sync service and S3 are enabled by the secure API provided by Amazon.

Consuming Synchronized Data by using EMR

Once the data is in the S3 bucket, it can be used by an existing EMR cluster, or a new cluster can be provisioned to process it. An AWS EMR cluster can be created using the AWS console, or its creation can be automated using the AWS CLI.

For example, let's assume that a company needs to analyze log files from network devices collected on an NFS share, and chooses to use EMR for this purpose.

The company has used Cloud Sync to replicate its files from NFS to the Logs S3 bucket, and has prepared an analyzeThreats.py Python script for the analysis.

Analysis output is stored in the output folder inside the bucket.

By using the following AWS CLI command, it is possible to provision a cluster, execute the script, store the output, and terminate the cluster:

```shell
aws emr create-cluster \
  --steps Type=STREAMING,Name='Streaming Program',ActionOnFailure=CONTINUE,Args=[-files,s3://Logs/scripts/analyzeThreats.py,-mapper,analyzeThreats.py,-reducer,aggregate,-input,s3://Logs/input,-output,s3://Logs/output] \
  --release-label emr-5.3.1 \
  --instance-groups InstanceGroupType=MASTER,InstanceCount=1,InstanceType=m3.xlarge InstanceGroupType=CORE,InstanceCount=2,InstanceType=m3.xlarge \
  --auto-terminate
```
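The contents of analyzeThreats.py are not shown in this post. As a rough idea of what such a streaming mapper could look like, here is a minimal sketch that counts warning and error events per device. The log line format (`<timestamp> <device> <severity> <message>`) and the severity names are assumptions made for illustration; the `LongValueSum:` key prefix is the convention that Hadoop Streaming's built-in `aggregate` reducer, used in the command above, relies on to sum the emitted values.

```python
import sys
from typing import Iterable, Iterator

# Hypothetical severities that count as potential threats.
SEVERITIES_OF_INTEREST = {"WARN", "ERROR", "CRITICAL"}


def map_lines(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'LongValueSum:<device>/<severity>\\t1' record per matching line."""
    for line in lines:
        # Assumed format: "<timestamp> <device> <severity> <message...>"
        fields = line.split(maxsplit=3)
        if len(fields) < 3:
            continue  # skip malformed lines
        device, severity = fields[1], fields[2].upper()
        if severity in SEVERITIES_OF_INTEREST:
            yield f"LongValueSum:{device}/{severity}\t1"


def main(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Entry point for streaming use: read log lines, write mapper records."""
    for record in map_lines(stdin):
        stdout.write(record + "\n")
```

To use it as a streaming mapper, the script would call `main()` at the bottom under the usual `if __name__ == "__main__":` guard, so that it reads log lines from standard input and writes records to standard output. The reducer then adds up the `1` values for each device and severity pair.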

Since Cloud Sync makes it easy to set up a sync back from S3 to NFS, results can be transferred to the local NFS server for user consumption.

Summary

The ability to synchronize data between NFS/CIFS volumes and Amazon S3 buckets quickly and securely, and to use that data to run AWS EMR jobs, will lead enterprises to rethink their Big Data strategy.

Leveraging the NetApp Cloud Sync service brings all the benefits of AWS EMR processing, such as cost savings, ease of deployment, and flexibility, one step closer.