September 6, 2018

Topics: AWS

Like many of you, I heard the news of the new Provisioned Throughput offering in Amazon Elastic File System (EFS), and it piqued my curiosity. See Amazon’s own documentation for a high-level overview of this new offering. In short, Amazon has recognized that some applications need more performance than their capacity alone would earn them. With that realization, Amazon has decoupled performance from capacity and said, “Go.”

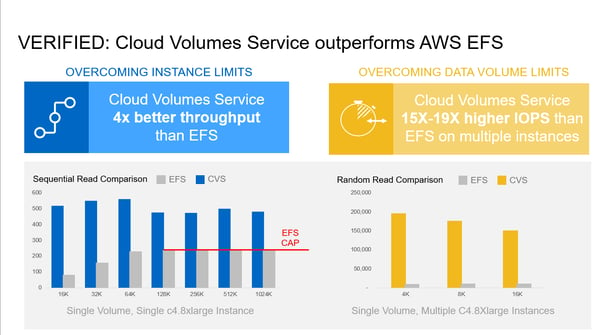


Amazon’s EFS Provisioned Throughput mode addresses its target well—light-capacity applications now get their bandwidth needs met. Here are the details:

- Bandwidth: Allocate anywhere from 1MiBps to 1024MiBps of throughput per file system.

- Bandwidth list price: $6.00 per MiBps per month, charged independently of capacity.

- Storage capacity list price: $0.30 per GiB per month.

- Caveat: You pay only for the bandwidth that your stored data doesn’t already provide. (Every 20GiB of used space comes with 1MiBps of sustained bandwidth.)

- Cost control: AWS lets you cut costs by switching between Provisioned Throughput mode and the classic Burst mode, but no more than once per day.
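
To see how these rules combine, here is a minimal Python sketch of a monthly Provisioned Throughput bill. It hard-codes the 2018 list prices and the 20GiB-per-MiBps baseline quoted above. Actual prices vary by region and over time, and `provisioned_monthly_cost` is a hypothetical helper, not an AWS API.

```python
# List prices and baseline ratio as quoted in this post (2018); assumptions, not live pricing.
STORAGE_PRICE_PER_GIB = 0.30       # USD per GiB-month
THROUGHPUT_PRICE_PER_MIBPS = 6.00  # USD per MiBps-month
GIB_PER_BASELINE_MIBPS = 20        # every 20GiB stored earns 1MiBps of sustained bandwidth


def provisioned_monthly_cost(stored_gib: float, provisioned_mibps: float) -> float:
    """Estimate a monthly Amazon EFS bill in Provisioned Throughput mode (hypothetical helper).

    Storage is always billed. Throughput is billed only for the part of the
    provisioned amount that the stored data's baseline does not already cover.
    """
    if not 1 <= provisioned_mibps <= 1024:
        raise ValueError("provisioned throughput must be between 1 and 1024 MiBps")
    baseline_mibps = stored_gib / GIB_PER_BASELINE_MIBPS
    billable_mibps = max(0.0, provisioned_mibps - baseline_mibps)
    storage_cost = stored_gib * STORAGE_PRICE_PER_GIB
    throughput_cost = billable_mibps * THROUGHPUT_PRICE_PER_MIBPS
    return round(storage_cost + throughput_cost, 2)
```

For example, `provisioned_monthly_cost(100, 50)` returns `300.0`: $30 for 100GiB of storage plus 45 billable MiBps at $6.00 each, because the first 5MiBps come with the stored data.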

What type of applications benefit from Provisioned Throughput mode?

Amazon specifically mentions development tools, web serving, and content management applications, which it identified as having low capacity yet high bandwidth needs. OK, the bandwidth requirements don’t need to be all that high, just higher than Burst mode can support at such low capacity levels.

For those of you wondering what else has changed, the answer is nothing. You still get the latency you are used to with Amazon EFS. The file-system-wide IOPS limit is still in place (7,000 IOPS per file system), as are the limits on file locks, the lack of SMB support, and the lack of snapshots. And each instance is still capped at 250MiB per second (MiBps) of throughput per file system.

Amazon EFS thus leaves IOPS-hungry applications such as OLTP databases wanting. OLTP systems are often the nerve center of an enterprise because they can include financial transaction systems, automated teller machines (ATMs), and retail sales. As such, they need high performance and reliability. The same is true for high-file-count applications such as electronic design automation (EDA) suites, or even code repositories such as Git and other version control systems. In general, workloads with a random-seek component are problematic; queries against genomic databases are one example (think WuXi NextCODE’s Genomically Ordered Relational Database).

Amazon gives the following limits:

The 250MiBps per-file-system, per-instance limit might be problematic for applications that require single file system access. Applications that can use multiple mount points, on the other hand, have an advantage when using Amazon EFS with Provisioned Throughput mode. MongoDB is an example of an application that requires single file system access per database: it is hungry for bandwidth, yet it is limited to 250MiBps. Hadoop and Spark workloads and machine learning workflows, by contrast, can use multiple file systems per instance. By spreading I/O across many file systems, these workloads can consume the instance’s full bandwidth.
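
The multiple-mount arithmetic can be sketched as a simplified Python model. It assumes the 250MiBps per-instance, per-file-system cap quoted above and treats the instance’s own network bandwidth as a single hypothetical input (`instance_network_mibps`). It ignores IOPS limits and other clients sharing the same file systems, and both function names are illustrative only.

```python
import math
from typing import Sequence

# Per-instance, per-file-system Amazon EFS throughput cap cited in this post.
EFS_PER_MOUNT_CAP_MIBPS = 250


def aggregate_mibps(fs_throughputs_mibps: Sequence[float], instance_network_mibps: float) -> float:
    """Upper bound on the bandwidth one instance can drive across several EFS mounts.

    Each mount contributes at most the per-mount cap, or less if that file system
    is provisioned lower. The total can never exceed the instance's own network
    bandwidth (a hypothetical input here, not a real EC2 figure).
    """
    per_mount = [min(EFS_PER_MOUNT_CAP_MIBPS, t) for t in fs_throughputs_mibps]
    return min(sum(per_mount), instance_network_mibps)


def mounts_needed(instance_network_mibps: float) -> int:
    """Minimum number of file systems needed to saturate the instance at the per-mount cap."""
    return math.ceil(instance_network_mibps / EFS_PER_MOUNT_CAP_MIBPS)
```

With an illustrative 1,200MiBps instance, a single mount tops out at 250MiBps (the MongoDB case), while `mounts_needed(1200)` says five file systems are enough to saturate the instance.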

In this age of ransomware, snapshots are more vital than ever for protecting against data loss. The classic needs, such as protection from user error, have not gone away, and neither has the business need for test/dev environments that duplicate the production system. It would have been nice to see Amazon EFS address its lack of file system snapshots in this version.

Amazon EFS’s lack of SMB support leaves a hole when it comes to home directories on Windows machines. The same is true for any other application that requires shared storage accessible from Microsoft Windows operating systems.

So, what is the overall solution for your application’s unmet needs, be they cost, recoverability, Windows support, or performance? Enter NetApp® Cloud Volumes Service for AWS, which offers the following benefits (so far):

- Multiprotocol support (NFSv3 and SMB) provides access from both Linux and Windows operating systems.

- Fast, no-impact NetApp Snapshot™ copies provide not just a point-in-time view of the file system, but the ability to revert on demand (through an API), create replica file systems, or simply extract previous versions of individual files from the .snapshot directory at the root of the file system.

- Service-level-controlled bandwidth limits of up to 3,500MiB per second per file system. Each Amazon EC2 instance can consume up to 1024MiBps (512MiBps read + 512MiBps write) or up to ~55,000 IOPS. The service also offers predictable low latency regardless of I/O type (think millisecond-ish), which is perfect for IOPS-hungry applications such as OLTP databases.
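
To make the `.snapshot` workflow concrete, here is a minimal Python sketch of recovering an earlier version of a single file from an NFS client. It assumes the volume is mounted at a path you pass in and that each Snapshot copy appears as a subdirectory of `.snapshot` at the volume root, as described above. `previous_versions` and `restore_file` are hypothetical helpers, not part of any NetApp API; reverting a whole volume or creating a replica goes through the service API and is not covered here.

```python
import shutil
from pathlib import Path

# Assumed layout: <mount_point>/.snapshot/<snapshot_name>/<relative path of the file>
SNAPSHOT_DIR = ".snapshot"


def previous_versions(mount_point: str, relative_path: str) -> dict[str, Path]:
    """Map each Snapshot copy name to its copy of `relative_path`, skipping copies without the file."""
    rel = relative_path.lstrip("/")  # keep the join relative to the mount point
    snap_root = Path(mount_point) / SNAPSHOT_DIR
    versions: dict[str, Path] = {}
    if not snap_root.is_dir():
        return versions
    for snap_dir in sorted(snap_root.iterdir()):
        candidate = snap_dir / rel
        if candidate.is_file():
            versions[snap_dir.name] = candidate
    return versions


def restore_file(mount_point: str, relative_path: str, snapshot_name: str) -> Path:
    """Copy one file out of a read-only Snapshot copy, overwriting the live version."""
    rel = relative_path.lstrip("/")
    src = Path(mount_point) / SNAPSHOT_DIR / snapshot_name / rel
    dst = Path(mount_point) / rel
    dst.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src, dst)  # preserves timestamps and permission bits where possible
    return dst
```

Copying out of the Snapshot copy leaves the Snapshot copy itself untouched, so you can restore the same file again from a different point in time if the first choice was wrong.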

For Cloud Volumes Service pricing per service level, visit us at NetApp Cloud Central.

Ultimately, each of us is looking for technology that will help us solve our business needs more quickly, less expensively, and, ideally, with less complexity. Provisioned Throughput mode removes the complexity of sizing for Burst mode, which is less than straightforward. I fully admit that, on the surface, Provisioned Throughput mode seems much more expensive than its Burst mode counterpart. That’s a mirage: for the same sustained throughput, the two modes are equivalently priced.
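
Why the two modes cost the same follows directly from the list prices above. Let S be the GiB actually stored and T the sustained MiBps the application needs, with T at least S/20 (otherwise Burst mode’s baseline already suffices). In Burst mode you must store, or pad to, 20T GiB to earn T MiBps of baseline. In Provisioned Throughput mode you pay for S GiB plus only the throughput that the stored data doesn’t cover. This sketch considers sustained baseline throughput only and ignores burst credits.

```latex
\begin{aligned}
C_{\text{burst}} &= 0.30 \times 20T = 6.00\,T \\
C_{\text{prov}}  &= 0.30\,S + 6.00\left(T - \frac{S}{20}\right)
                  = 0.30\,S + 6.00\,T - 0.30\,S = 6.00\,T
\end{aligned}
```

With S = 100 and T = 50, for instance, both modes come to $300 per month.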

So, what about speed? Is the Provisioned Throughput mode faster? More consistent, yes, more predictable, yes, but faster, no. But that’s OK; NetApp Cloud Volumes Service for AWS is a yes across the board.

Come get your application needs met. Check us out at NetApp Cloud Volumes Service for AWS.