- Product

- Solutions

- Resources

LEARN

- Cloud Storage

- IaaS

- DevOps

CALCULATORS

- TCO Azure

- TCO AWS

- TCO Google Cloud

- TCO Cloud Tiering

- TCO Cloud Backup

- Cloud Volumes ONTAP Sizer

- Azure NetApp Files Performance

- Cloud Insights ROI Calculator

- AVS/ANF TCO Estimator

- GCVE/CVS TCO Estimator

- VMC+FSx for ONTAP

- NetApp Keystone STaaS Cost Calculator

BENCHMARKS

- Azure NetApp Files

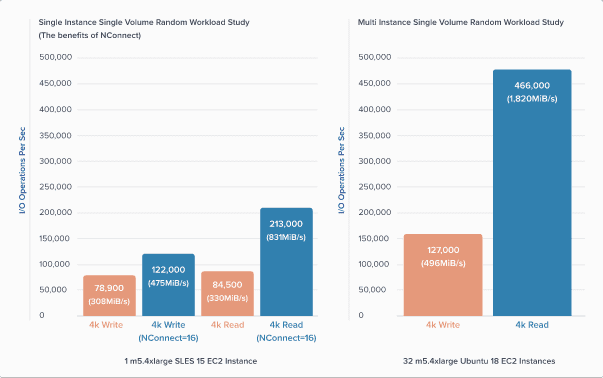

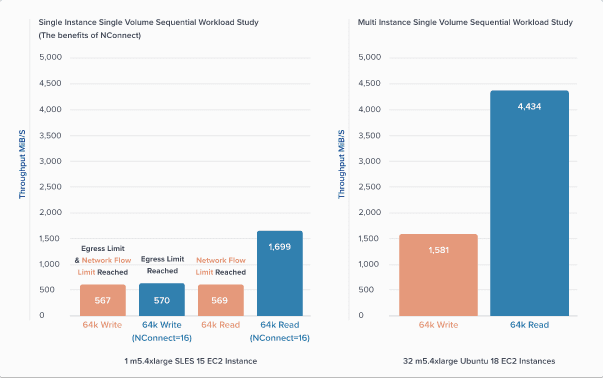

- CVS AWS

- CVS Google Cloud

- Pricing

- Blog

TOPIC

- AWS

- Azure

- Google Cloud

- Data Protection

- Kubernetes

- General

- Help Center

- Get Started