
June 22, 2017

Topics: Cloud Volumes ONTAP, AWS | 7 minute read

Several hundred hours of high definition video are uploaded to YouTube every single minute.

Those videos are then seamlessly distributed around the world to multiple data centers, ensuring high-performance streaming to a global user base while also being backed up in case of outages.

Amazon’s cloud object storage service, Amazon S3, now stores trillions of objects and serves more than one million requests on those objects per second.

Welcome to 2017, the era of exponential storage growth and backup needs. With these new challenges, more businesses are beginning to understand the benefits of utilizing expansive cloud computing networks (public or private) as part of their infrastructure strategies.

To truly understand the acceleration of cloud adoption, let’s take a look back at the timeline of the storage industry.

In this article, we’ll explore the history of enterprise storage and data center technology in order to gain a more comprehensive view of this rapidly changing marketplace.

1. DAS: In the Beginning

We’ll start our journey with direct attached storage (DAS). The original monolithic IBM mainframes, the first true enterprise computers, used directly attached storage.

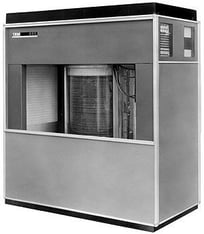

In 1956, IBM released the first magnetic hard disk drive. These first hard disks were the size of a couple of refrigerators and topped out at a capacity of around 4 MB.

To the right: The IBM 350, the world's first hard drive (1956) (Source: Pinterest)

Over the years, as technology improved and disks shrank, they were put into “large” arrays and connected directly to the servers that needed them. Aside from capacity, the major limitation at that time was cable length, which restricted how far an array could sit from its server until the advent of Fibre Channel.

This architecture posed an organizational problem, as it led to arrays scattered about the data center wherever a server resource needed them. If there was not enough free space in an active array, an additional array would have to be cabled up somewhere nearby.

It’s easy to see the trouble with this kind of storage architecture. If you need to attach disks to every new application server, storage hardware scales one-to-one with servers: each server needs its own arrays, and free space on one server’s array cannot be used by another.

Plus, after several rounds of capacity increases and new hardware, it often became near impossible to maintain an organized cable management system.

2. Centralized Storage Takes Center Stage

Down the line, a solution emerged that addressed a lot of the troubles with directly attached storage arrays. That solution was a combination of Storage Area Networks and then Network Attached Storage devices.

The Storage Area Network (SAN) connected storage arrays over a network so their resources could be shared and accessed by any of the servers in the data center. This eliminated the physical organizational problems caused by DAS, as servers and storage could be kept separate.

If you needed increased capacity, it was just a matter of adding more disks to the SAN.
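The difference between per-server arrays and a shared pool can be sketched with some simple arithmetic. The example below is only an illustration: the server names, capacity figures, and array size are hypothetical and do not come from any real deployment.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str       # hypothetical server name
    needed_gb: int  # capacity the server's application needs


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def das_arrays_needed(servers, array_gb):
    """DAS: every server gets its own array(s); free space is not shared."""
    return sum(ceil_div(s.needed_gb, array_gb) for s in servers)


def san_arrays_needed(servers, array_gb):
    """SAN: all arrays form one pool that any server can draw from."""
    total_gb = sum(s.needed_gb for s in servers)
    return ceil_div(total_gb, array_gb)


# Hypothetical workload: three servers, arrays of 1000 GB each.
ARRAY_GB = 1000
servers = [Server("app1", 300), Server("app2", 1100), Server("app3", 200)]

das = das_arrays_needed(servers, ARRAY_GB)
san = san_arrays_needed(servers, ARRAY_GB)
total_needed = sum(s.needed_gb for s in servers)

print(f"DAS: {das} arrays, {das * ARRAY_GB - total_needed} GB stranded")
print(f"SAN: {san} arrays, {san * ARRAY_GB - total_needed} GB free in the pool")
```

In this toy model the pooled design needs fewer arrays for the same workload, because leftover space on one array is available to every server rather than stranded next to a single one.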

Network Attached Storage (NAS) is often confused with SAN, but there are notable differences between the two. A SAN presents raw block-level storage to servers, while a NAS serves files over the network. NAS includes its own physical hardware component, referred to as a “head.” The head runs a simple OS and has the job of connecting to the local network, authenticating requests from servers, and managing the file operations on the storage drives.

By the mid-1990s, a new networking technology rose in popularity because it enabled the linking of SANs across more than just one data center location. Fibre Channel typically uses fiber optics (light) to transmit its signal.

This enabled Fibre Channel links to offer throughput of well over 15x that of the Ethernet technology of its time. Companies that needed to transmit large files or images embraced this technology immediately.

3. The Evolution of the Hard Disk and Enterprise Networking

As hard disk technology improved, so did the means of connecting and attaching the storage. The 1980s brought us not only creative hairstyles and immortalized pop music, but also the interface that would become a standard for the next several decades.

The Small Computer System Interface (SCSI) used a parallel bus design and was widely used in drive connectivity for close to 20 years.

Serial Attached SCSI (SAS) was the higher-performing successor to parallel SCSI. It supported full-duplex signal transmission at 3.0 Gb/s as early as 2005 and now boasts speeds of 12 Gb/s and up.

Also notable was the inclusion of two data ports providing a failover/redundancy feature.

In addition to high speeds and multiple ports, SAS keeps the SCSI command set, and SAS controllers and backplanes can also work with SATA drives without issues.
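To put the SAS line rates quoted above into more familiar units, here is a rough conversion to payload bandwidth. It is a sketch under one assumption that is not stated in the article: that the link uses 8b/10b encoding (8 data bits carried in every 10 bits on the wire), as the 3, 6, and 12 Gb/s SAS generations do. It ignores protocol overhead and gives the figure for one direction of a single link.

```python
def sas_payload_mb_per_s(line_rate_gbps: float) -> float:
    """Approximate one-way payload bandwidth of a single SAS link in MB/s.

    Assumes 8b/10b line encoding and ignores protocol overhead.
    """
    data_bits_per_s = line_rate_gbps * 1e9 * 8 / 10  # strip encoding overhead
    return data_bits_per_s / 8 / 1e6                 # bits -> bytes -> MB


for rate_gbps in (3.0, 6.0, 12.0):
    mb_s = sas_payload_mb_per_s(rate_gbps)
    print(f"{rate_gbps:>4} Gb/s link ~ {mb_s:.0f} MB/s of payload per direction")
```

Under these assumptions a 3.0 Gb/s link works out to roughly 300 MB/s per direction and a 12 Gb/s link to roughly 1,200 MB/s.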

SATA connected devices with a single, smaller cable, which made devices easier to install and manage. It also increased the maximum cable length over its predecessor, parallel ATA. Nowadays, SATA drives are often used in conjunction with faster SAS drives to provide cheaper and larger storage for older data.

Central storage became increasingly prevalent as technology improvements and the business needs of enterprises converged. With increased capacity and decreased size, it started to make sense to have storage resources that were shared by multiple servers.

This way servers could be increased separately to handle application scale, while storage could either be maintained or upgraded much more simply.

The history of flash storage should also be noted. Flash memory was originally invented in 1984, but it wasn’t until 1991 that NAND flash memory was presented. This configuration allowed for a much lower cost per unit of capacity, which triggered exponential growth in memory size over the next decade.

The special thing about this kind of memory was that, unlike dynamic RAM, it retained its data even after its host device was powered off.

Few at this point in time foresaw that flash memory would ultimately make its way into competing with the large-sized hard drives of enterprise storage.

4. The Cloud Movement: Centralization Goes Further

Our journey culminates with cloud storage services such as Amazon Elastic Block Store (EBS). These services essentially make a very large and high-performing networked block storage system available to even the smallest and lowest budget user.

In a matter of moments, a company can ramp up its storage capacity dramatically if it suits its business needs, and all of this is possible without buying and cabling any new hardware.

Aside from EBS, Amazon offers several storage types to accomplish different users’ needs. Choosing the right cloud storage mix is the first step an organization must take when beginning to work with a public cloud infrastructure.

Beneath the simple web interface, quite a lot is going on in a service like EBS. Yes, massive numbers of hard disks are spinning in the background (and you can opt for SSD volumes of up to 16 TB and 20,000 IOPS), but there is added complexity to improve reliability and performance as well.

With AWS EBS, volumes are automatically replicated within their Availability Zone. This protects you from individual drive failures on storage arrays, but not from the very rare outage of an entire Availability Zone.

For many, the cloud can be used for simple and expansive offsite archive storage. Services like Amazon Glacier pass the incredible cost savings of advancements in storage capacities on to the end user for long term archiving of their onsite data.

On the Horizon: Backups and Organization

On a hardware level, all these arrays are simply large collections of hard drives, but from a software management perspective technologies vary quite a bit for each cloud provider.

The rapid expansion of storage available has forced major storage users to address two key problems.

- The first is the organization of endless storage arrays and drives. Providers need a way to pool their resources effectively and make them usable by a large number of users. This is where systems like Ceph come into play and attempt to unite enterprise storage devices into a much more usable entity.

- The second major concern is performing efficient backups and recoveries on exponentially growing datasets. As the Infrastructure-as-a-Service (IaaS) market continues to grow and become the primary enterprise storage method, the challenges of backing up customer data and being able to recover it quickly also grow. As competition between cloud storage providers heats up, those that master backups for their customers will ultimately emerge the winners.

The Power of Enterprise Storage

The true power of cloud-based storage is that it brings together the incredible capacity of centralized block storage with an “on-demand” service model.

Cloud is more of a paradigm shift in business than a brand new architecture for storage.

We’ve reviewed how centralized storage has, in fact, been around for quite a long time. The big difference is that our new cloud-storage world allows companies to completely focus on their business without diverting huge resources and time to physical infrastructure.

Whether you're thinking about moving to the cloud, or already have, watch the on-demand webinar to learn more about migrating your SQL applications to and from the cloud.

Want to get started? Try out Cloud Volumes ONTAP today with a 30-day free trial.